The gap between great content and real visibility almost always comes down to search engine basics.

Search engines sit between almost every question and almost every click. Some industry studies suggest that over 80% of online experiences begin with a search query. When search engines discover and understand a page, traffic starts flowing. When they do not, even the best ideas stay hidden.

Search engines can seem mysterious and deeply technical, yet the core ideas are simple enough for any marketer, writer, or founder to grasp. Once those basics click, terms like crawling, indexing, and ranking stop sounding like jargon and start feeling like a clear checklist.

By the end of this guide, you will understand how a search engine works step by step, why that matters for business growth, and how to apply SEO fundamentals in a practical way.

So, let’s get started.

What is a search engine, and why does it matter?

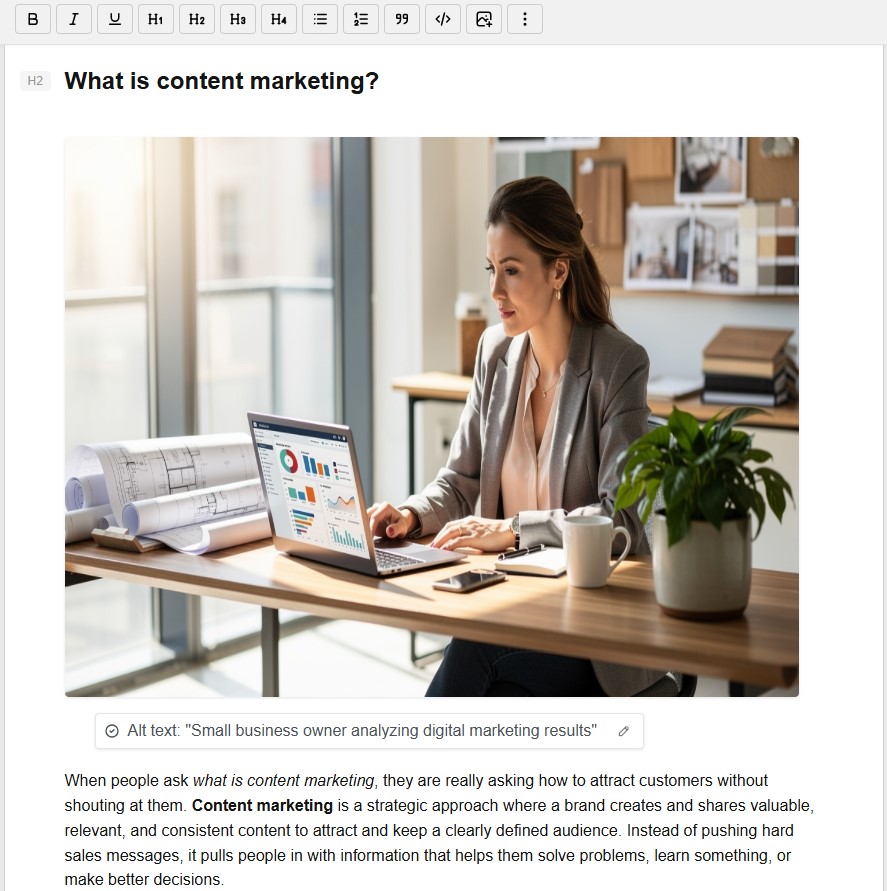

A search engine is a web-based tool that helps people find information on the internet. It does not scan the live internet from scratch every time someone types a query. Instead, it searches a massive prebuilt database of pages, often called the index, and uses algorithms to decide which ones to show.

This difference between the index and the live web sits at the heart of search engine basics. When you type a keyword, you are really asking the search engine to look inside its index and return the best matches.
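
To make the index idea concrete, here is a minimal sketch of an inverted index in Python. The three pages and their URLs are made up for illustration, and real engines store far more than bare words, but the key point carries over: the query is answered from a structure built ahead of time, not by rereading the pages.

```python
from collections import defaultdict

# Hypothetical mini "web": URL -> page text (made up for illustration).
PAGES = {
    "https://example.com/coffee": "how to brew coffee at home",
    "https://example.com/tea": "how to brew green tea",
    "https://example.com/bikes": "how to fix a flat bike tire",
}

def build_index(pages):
    """Map each word to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in text.lower().split():
            index[word].add(url)
    return index

def search(index, query):
    """Return URLs containing every query word, using only the index."""
    words = query.lower().split()
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results

INDEX = build_index(PAGES)  # built once, ahead of any query
print(sorted(search(INDEX, "brew coffee")))  # ['https://example.com/coffee']
```

A page that never made it into `INDEX` cannot be returned by `search`, no matter how good its content is.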

As of 2025, Google’s index alone contains information from billions of pages and is well over 100 million gigabytes in size, spread across many data centers.

For a business, that index is where visibility lives. If a page is not in the index, it cannot appear in results at all. If it is in the index but looks weak or unclear to the algorithm, it will sit many pages deep, where almost nobody clicks.

That is why search engine optimization focuses so heavily on making pages easy to understand and appealing to search engines.

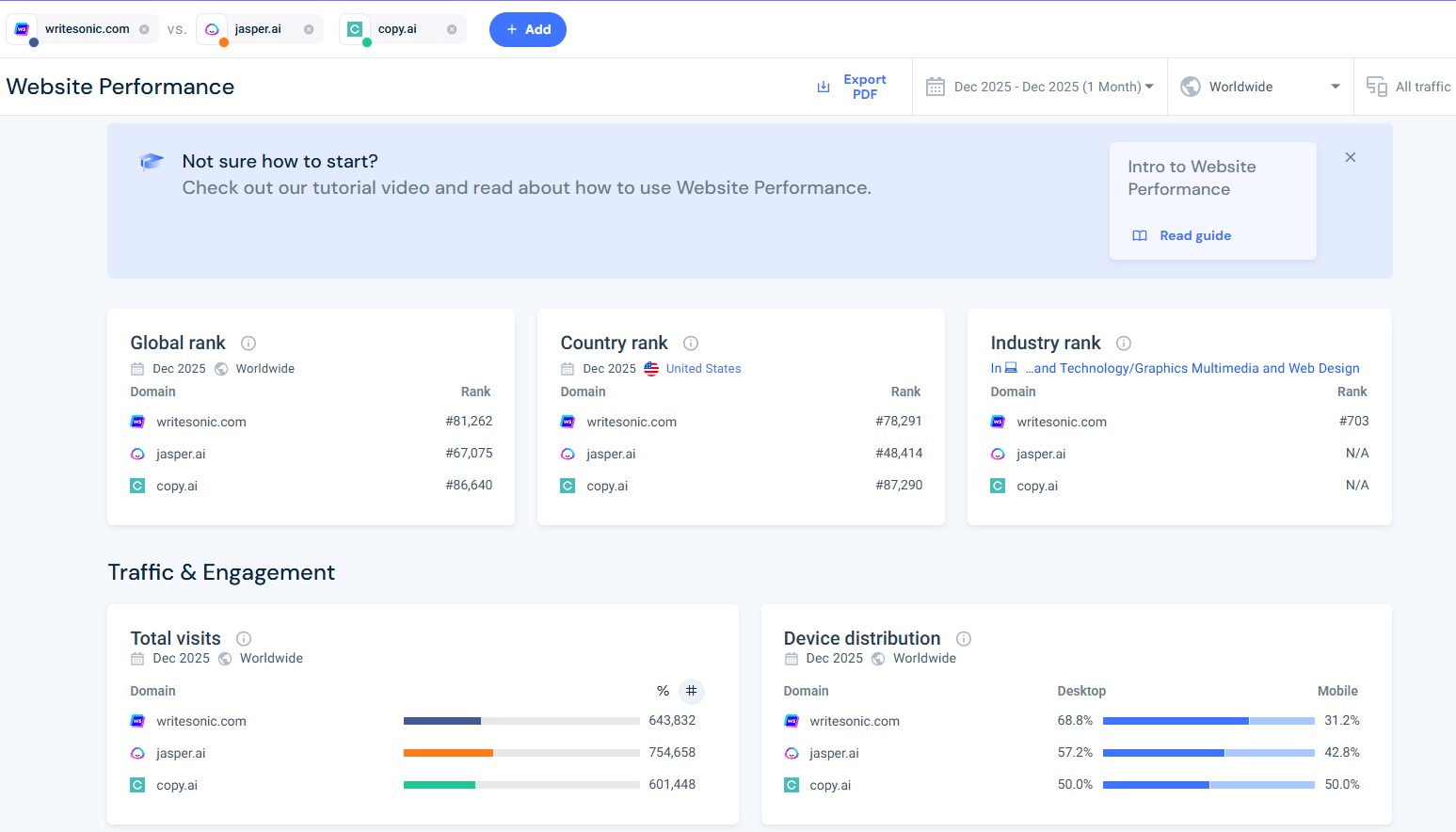

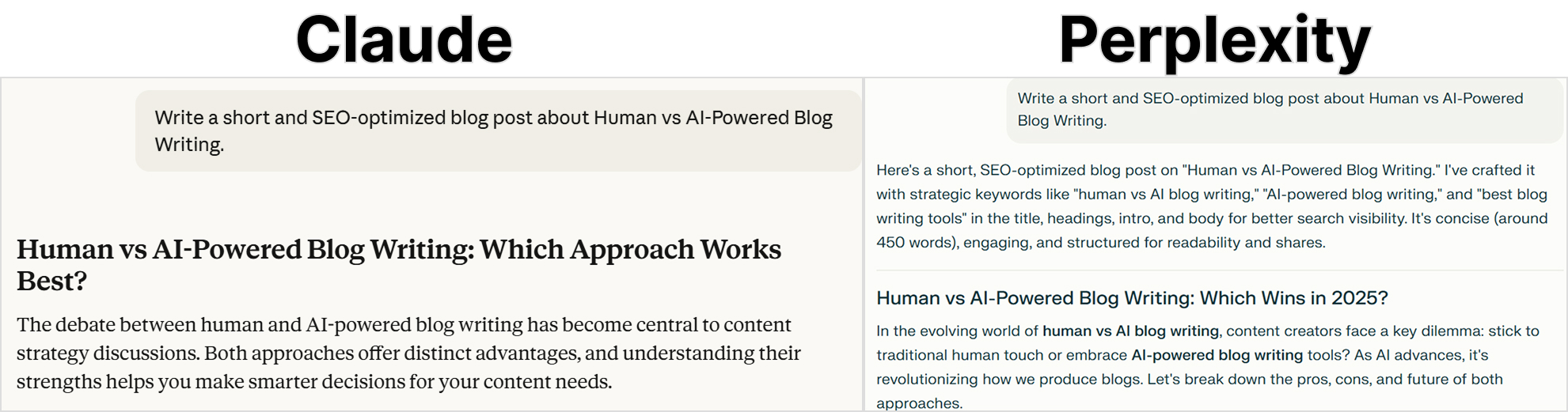

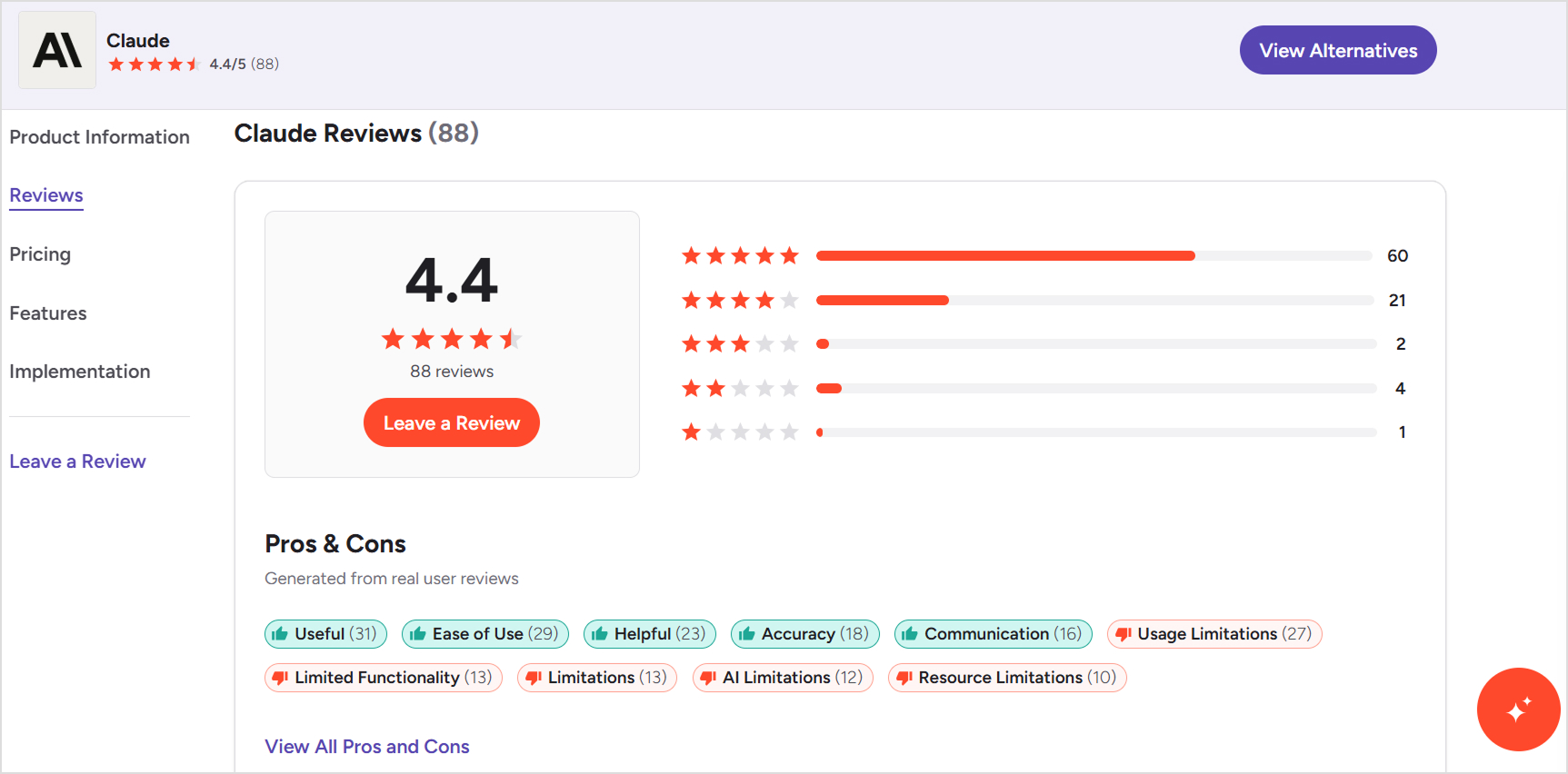

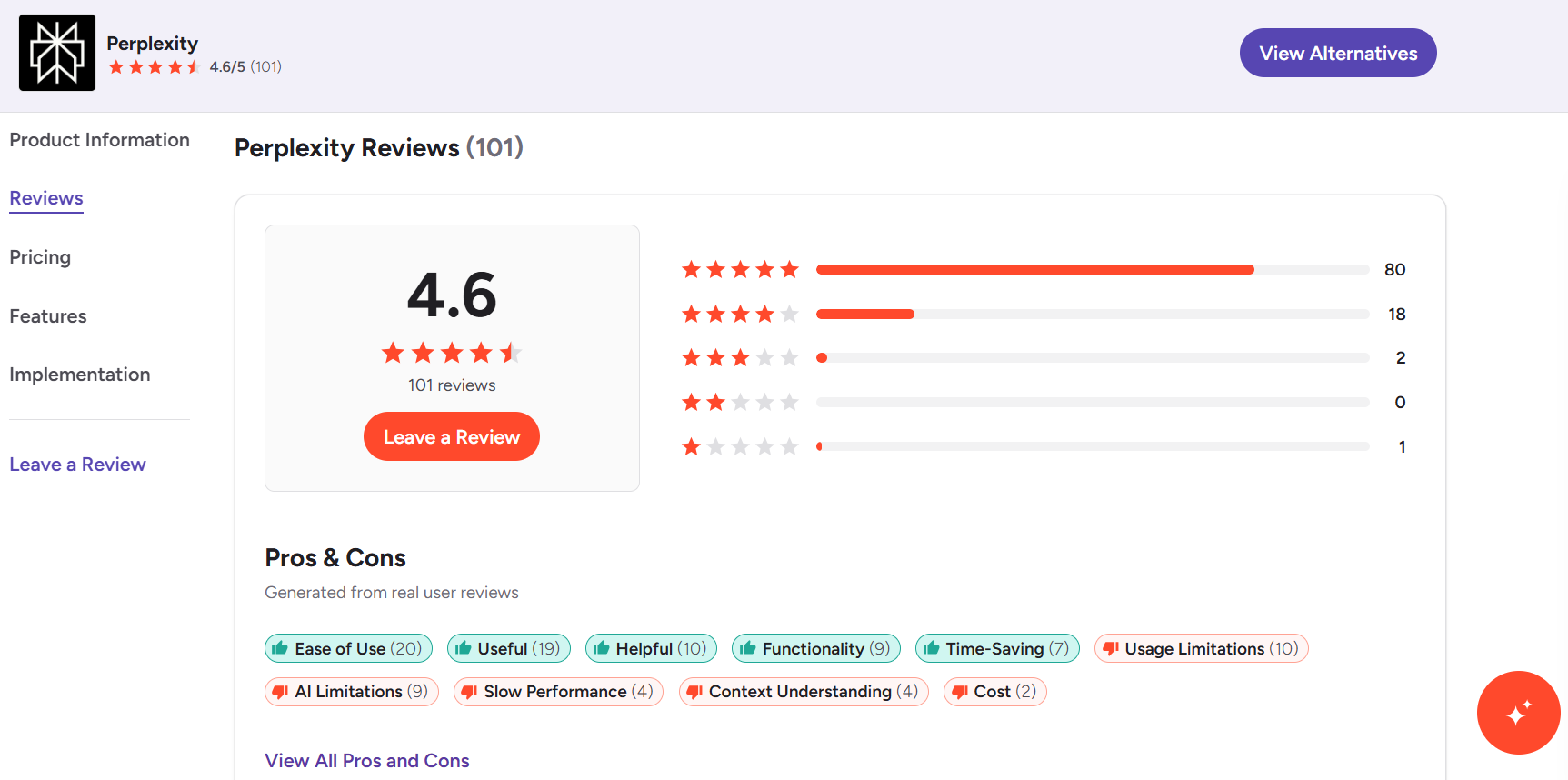

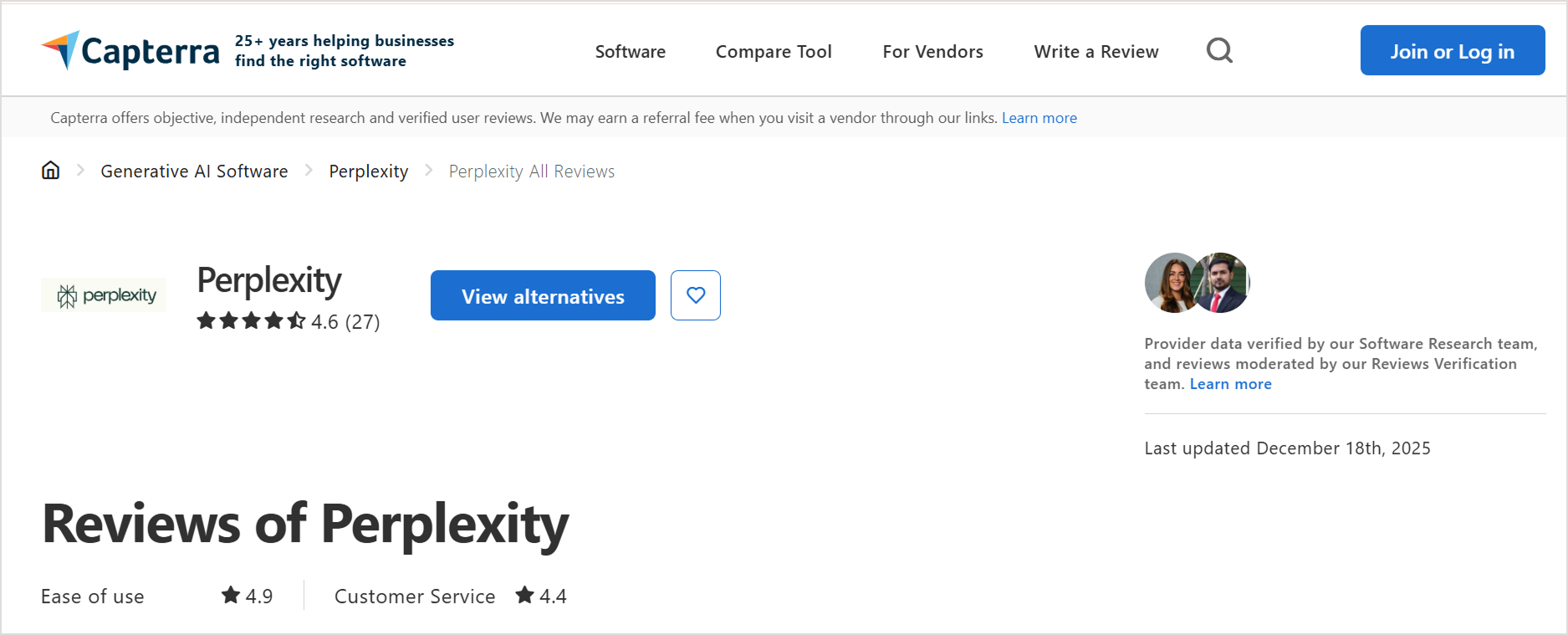

Different search engines use different indexes and algorithms, which is why Google’s and Bing’s results can differ for the same query. A statistical analysis of search engines, which compares major search platforms side by side, illustrates this.

For marketers and content teams, understanding the split between index and algorithm makes SEO planning easier.

How the Google search engine works, step by step

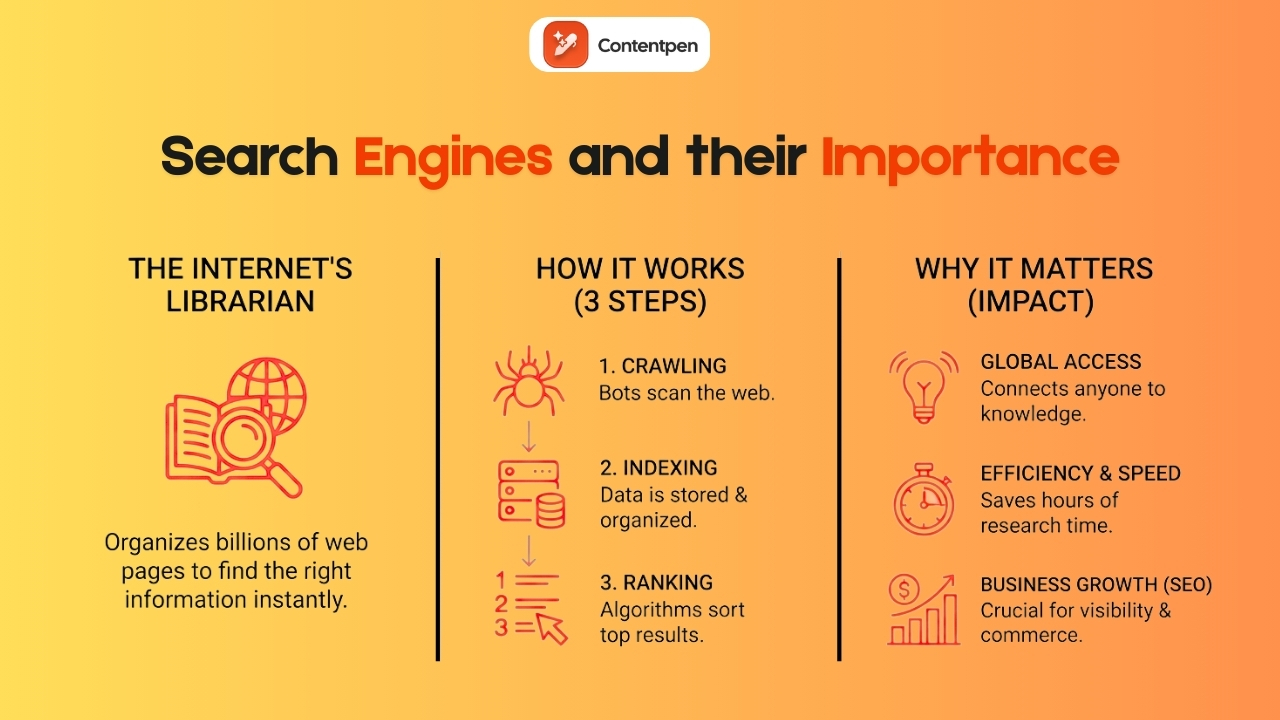

Every page that appears in a result goes through the same three‑stage process: crawling, indexing, and ranking (or serving). Google’s in-depth guide to how search works provides official documentation on each phase.

In brief, the three stages work as follows:

- Crawling – Discovery, where bots follow links and read pages.

- Indexing – Organization, where those pages are analyzed and added to the database.

- Ranking and serving – Decision, where the engine matches a query to the best pages it already knows.

This process never stops. Crawlers revisit sites, indexes update, and rankings shift as content changes, links appear, and user behavior moves.

Not all pages make it through each stage. Some never get crawled, some are crawled but not stored, and some are indexed but rarely or never shown.

The table below breaks down those stages.

| Stage | What happens | Key question for you |
| --- | --- | --- |
| Crawl | Bots discover URLs and fetch page content | Can search engines find and reach your pages? |
| Index | Systems analyze content and add it to the search database | Is your content clear and of high enough quality? |
| Serve | Algorithms match queries to indexed pages and order the results | Does your page look like the best answer? |

When you hear people talk about search engine basics or SEO for beginners, they are usually talking about how to help a site move smoothly through this path. Technical SEO covers crawling and indexing, while on-page and off-page SEO influence how pages perform in the serving stage.

Step #1: Crawling – How search engines discover your content

Crawling is the stage where search engines learn that your pages exist. Since there is no master list of all URLs on the internet, engines use automated programs called crawlers, bots, or spiders to follow links and fetch content. Google’s crawler is often called Googlebot.

These crawlers start with known URLs, fetch pages, and discover new ones by following links, gradually mapping the web over time.
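
The discovery loop described above can be sketched as a breadth-first walk over a to-visit list. This is a simplified illustration, not how Googlebot is implemented: the `LINK_GRAPH` dictionary is a hypothetical stand-in for real HTTP fetches, and real crawlers add politeness delays, scheduling, and much more.

```python
from collections import deque

# Hypothetical link graph standing in for real HTTP fetches:
# each URL maps to the links found on that page.
LINK_GRAPH = {
    "/": ["/blog", "/pricing"],
    "/blog": ["/blog/post-1", "/"],
    "/pricing": [],
    "/blog/post-1": ["/blog"],
    "/orphan": ["/"],  # nothing links here, so it is never discovered
}

def crawl(seed_urls, fetch_links):
    """Breadth-first discovery starting from a list of known URLs."""
    frontier = deque(seed_urls)  # the to-visit list
    seen = set(seed_urls)
    order = []
    while frontier:
        url = frontier.popleft()
        order.append(url)
        for link in fetch_links(url):
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return order

discovered = crawl(["/"], lambda url: LINK_GRAPH.get(url, []))
print(discovered)  # ['/', '/blog', '/pricing', '/blog/post-1']
```

Note that `/orphan` exists in the graph but never shows up in `discovered`, because no crawled page links to it.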

In simpler words, crawling in a search engine is the raw data‑gathering step. For any business that cares about SEO basics, this is where visibility starts.

How crawlers find pages with links and sitemaps

Crawlers rely on two main pathways to find content:

- Links, both internal and external, that connect one page to another

- Sitemaps that act like a directory you hand to the search engine

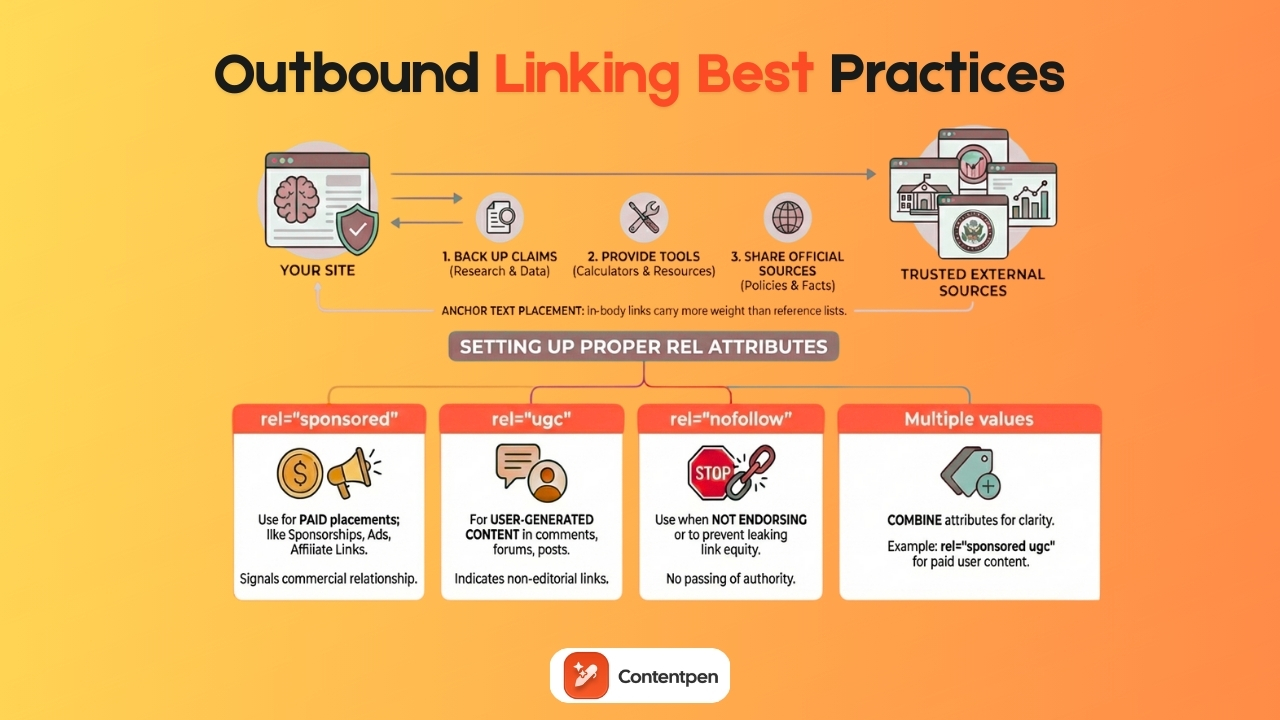

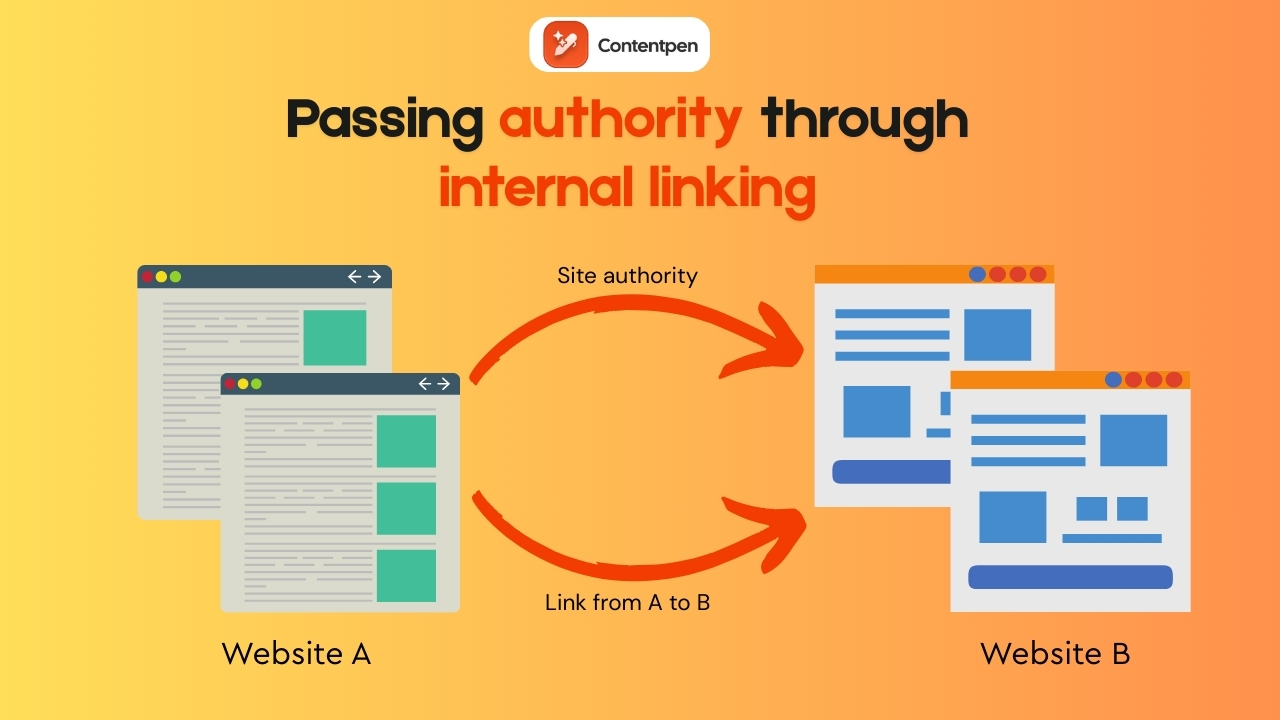

Internal and external links are the path crawlers prefer most of the time. When a crawler lands on a page, it looks through the links in the HTML and adds new ones to its to‑visit list. That means your internal linking structure matters a lot, because pages that are buried with no links pointing at them are much harder for bots to discover.

When you make sure important pages are linked from menus, category pages, and other high‑traffic content, you help crawlers find and revisit those key URLs.
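
As a rough illustration of how a bot might pull links out of a page, the sketch below uses Python’s built-in `html.parser`. The sample HTML and the `example.com` base URL are assumptions for the demo, and production crawlers handle many more edge cases.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

class LinkExtractor(HTMLParser):
    """Collect absolute URLs from <a href="..."> tags."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        # Skip in-page anchors and non-web schemes.
        if href and not href.startswith(("#", "mailto:", "javascript:")):
            self.links.append(urljoin(self.base_url, href))

def extract_links(html, base_url):
    parser = LinkExtractor(base_url)
    parser.feed(html)
    return parser.links

SAMPLE_HTML = """
<nav><a href="/pricing">Pricing</a> <a href="blog/seo-basics">Blog</a></nav>
<p>See <a href="https://other.example/guide">this guide</a>
or go <a href="#top">back to top</a>.</p>
"""
print(extract_links(SAMPLE_HTML, "https://example.com/"))
```

Each extracted URL would then be added to the crawler’s to-visit list, which is why a page with no inbound links is so hard to discover.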

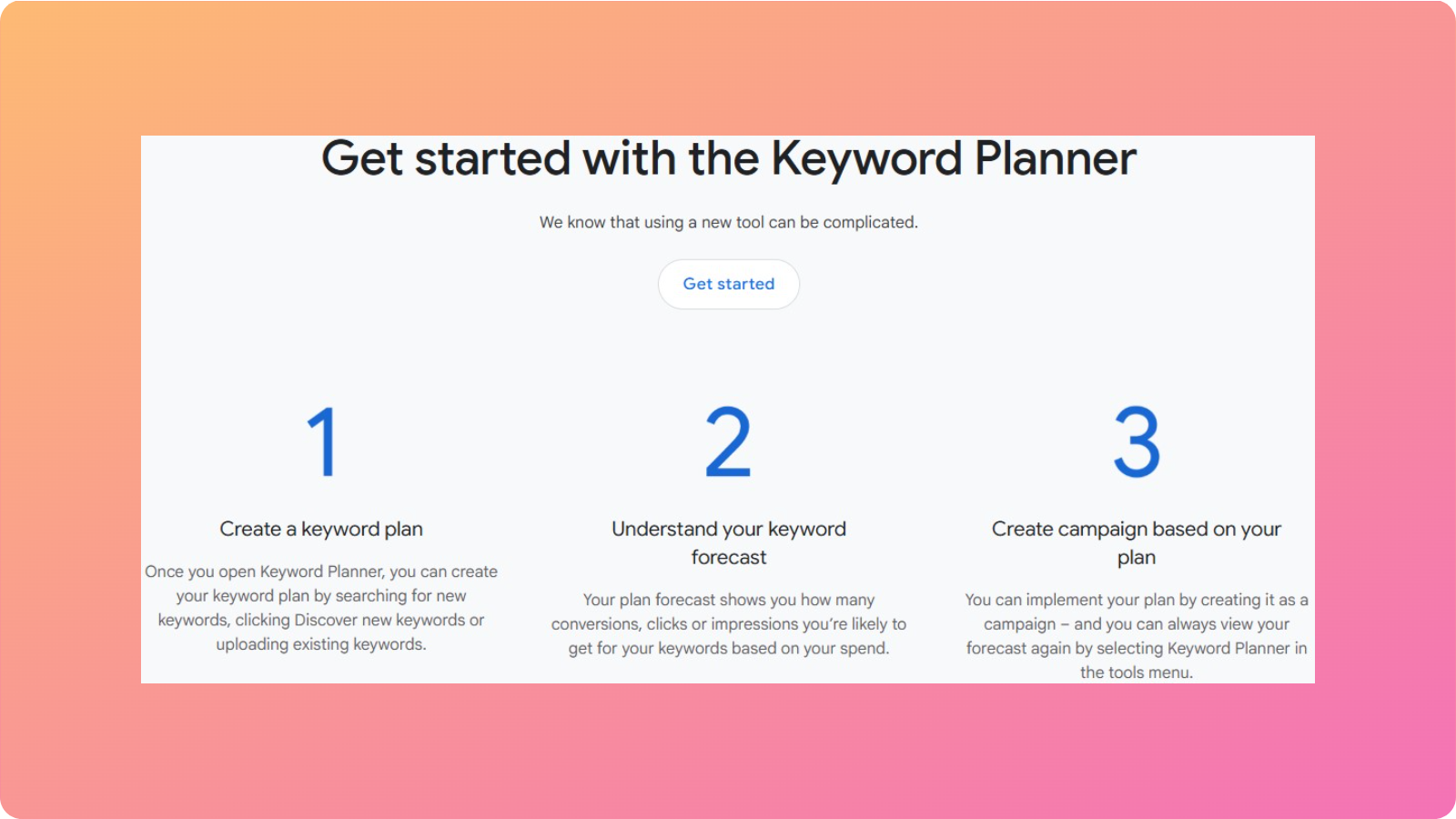

Sitemaps provide a second line of help. An XML sitemap lists important pages and optional details such as last-modified dates. Many modern content platforms can generate this file automatically, so teams without advanced technical skills can still maintain one.

When you submit that sitemap through tools like Google Search Console, you give crawlers a direct map of what matters on your site. Using both methods together is far stronger than relying on only one.
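
If your platform does not generate a sitemap for you, a basic one is simple to produce. The sketch below builds a minimal sitemap with Python’s standard library, following the sitemaps.org format. The URLs and dates are placeholders, and a real file would normally also start with an XML declaration.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(entries):
    """entries: iterable of (url, last_modified) pairs; last_modified may be None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:  # lastmod is optional in the sitemap format
            ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")

sitemap_xml = build_sitemap([
    ("https://example.com/", "2025-01-15"),
    ("https://example.com/blog/seo-basics", None),
])
print(sitemap_xml)
```

The resulting text can be saved as `sitemap.xml` and submitted through Google Search Console.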

Crawl frequency, rendering, and crawler limitations

Crawling is not random. Search engines decide how often to visit each site, how many URLs to fetch, and how deep to go based on several signals.

Important factors include:

- Popularity – Pages and sites that attract many links and visits tend to get crawled more often.

- Update habits – A news site that publishes multiple times a day sends a clear signal to crawlers to return often, while a static brochure site might be checked far less often.

- Technical health – Clean site structure and fast server response let crawlers fetch more pages without strain.

Modern crawlers do more than read HTML. Googlebot, for example, can render pages with a headless browser similar to Chrome. That means it can run JavaScript, load dynamic content, and see most of what a human visitor sees. This is very important for single‑page apps and sites that depend on scripts for core content.

Crawling still has limits. Robots.txt files can block entire folders or an entire site if misconfigured. Pages behind logins or paywalls are off‑limits.

Also, orphan pages with no internal or external links may never be found, and slow or error-prone servers can cause crawlers to back off.
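
To see how a robots.txt rule can block a folder, you can test rules locally with Python’s `urllib.robotparser`. The rules below are a hypothetical example, and Python’s parser uses simple prefix matching, so treat this as an approximation of how any particular search engine interprets the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents, parsed from a string rather than fetched.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart
"""

def is_allowed(robots_txt, user_agent, url):
    """Return True if the given rules let this user agent fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)

for path in ["/blog/seo-basics", "/admin/settings", "/cart"]:
    url = "https://example.com" + path
    print(path, is_allowed(ROBOTS_TXT, "Googlebot", url))
```

A single stray `Disallow: /` line would make `is_allowed` return `False` for every URL on the site, which is exactly the kind of misconfiguration worth checking for after a redesign.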

Step #2: Indexing – How search engines organize and store information

Indexing begins after a page has been crawled. At this point, the search engine tries to understand the content and decide where and how to store it in the index.

During indexing, the engine processes text, layout, links, and structured data. It records which words appear, which phrases stand out, and how the content is organized with headings, lists, and other markup.

It also looks at signals such as language, country targeting, and mobile readiness, which help the engine decide when and where a page should be shown.

What information gets stored in the index

When a page is indexed, the system does not keep just one flat copy of the text. It stores several kinds of information that help with matching and ranking:

- Words and phrases – Which terms appear on the page, how often, and in which positions. Titles, headings, and early paragraphs carry more weight than text in footers.

- HTML and metadata – Title tags, meta descriptions, header tags (H1, H2, H3), and image alt text all provide clues about the topic. Schema markup can describe products, events, articles, how-to guides, and more in a much more structured way.

- Broader signals – Primary language, likely geographic focus, freshness, mobile friendliness, and engagement patterns for similar pages. Over time, backlink information and user interactions also contribute to the stored profile for that URL.

Common filler words, often called stop words, are sometimes ignored to save space and make indexing more efficient.
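
A minimal sketch of the word-and-position idea might look like this. The stop-word list, field names, and sample page are all assumptions for illustration. Actual index formats are proprietary and far richer.

```python
import re
from collections import defaultdict

# A tiny, hypothetical stop-word list; real systems use their own, if any.
STOP_WORDS = {"a", "an", "the", "of", "to", "and", "in", "is"}

def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def index_page(fields):
    """Record the field and position of every non-stop-word term.

    fields: dict such as {"title": "...", "body": "..."}.
    Returns {term: [(field, position), ...]}.
    """
    postings = defaultdict(list)
    for field, text in fields.items():
        for position, term in enumerate(tokenize(text)):
            if term not in STOP_WORDS:
                postings[term].append((field, position))
    return dict(postings)

page = {
    "title": "The Basics of Crawling",
    "body": "Crawling is the first stage of search.",
}
entry = index_page(page)
print(entry["crawling"])  # [('title', 3), ('body', 0)]
```

Because each posting remembers its field, a ranking step could later weight a match in the title more heavily than one in the body.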

Canonicalization and managing duplicate content

The web overflows with near‑duplicate pages. The same content can appear at multiple URLs because of tracking parameters, print views, mobile versions, or simple copy‑paste behavior. Search engines need a way to sort through these clusters so that results are not filled with duplicate pages.

Canonicalization is the process of selecting a single version from a set of duplicates. The engine tries to find the best representative page and treats that URL as the primary one for ranking purposes. Other versions are still noticed but are treated as alternates.

Duplicates can arise for many reasons. For example:

- An online store might show the same product under different categories with different URL paths.

- A blog might have both www and non‑www versions, or show the same article with and without tracking codes.

- Sometimes mobile and desktop versions live on separate subdomains.

You can help the engine choose the correct page by using canonical tags.

A rel="canonical" tag in the HTML head points to the preferred URL for that content.

When used well, this tag keeps link equity from splitting across many copies and makes ranking signals clearer.
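
Before adding canonical tags, it helps to know which of your URLs are really the same page. The sketch below groups URL variants by a normalized form. The tracking-parameter list and the rules (force HTTPS, drop `www.`, trim trailing slashes) are assumptions to adapt to your own site, not the rules any search engine uses.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed list of tracking parameters to ignore; adjust for your own site.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "gclid", "fbclid"}

def normalize(url):
    """Reduce a URL to one comparable form (illustrative rules only)."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[4:]
    query = [(k, v) for k, v in parse_qsl(parts.query) if k not in TRACKING_PARAMS]
    path = parts.path.rstrip("/") or "/"
    return urlunsplit(("https", host, path, urlencode(sorted(query)), ""))

def group_duplicates(urls):
    """Map each normalized URL to the original variants that collapse into it."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return dict(groups)

urls = [
    "http://www.example.com/shoes/?utm_source=newsletter",
    "https://example.com/shoes",
    "https://example.com/shoes?color=red",
]
for canonical, variants in group_duplicates(urls).items():
    print(canonical, "<-", len(variants), "variant(s)")
```

Any group with more than one variant is a candidate for a canonical tag pointing at the preferred URL.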

Common indexing problems and fixes

Even when crawling looks healthy, pages can fail to make it into the index. Most of the time, this comes down to content quality, blocking rules, or site structure.

- Thin content – If a page has only a few lines of text or feels spammy, search engines may mark it as crawled but not indexed.

- Noindex directives – Meta robots directives such as noindex are useful on thank‑you pages or admin areas that should be excluded from search results. Problems arise when they end up on important pages by mistake during a template change or redesign.

- Complex architecture and heavy JavaScript – If key content only appears after user actions, or if the HTML structure gives little hint about the topic, the engine may struggle to understand the page.
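
A stray noindex directive is easy to check for in a script. Here is a small sketch that scans a page’s HTML for a robots meta tag. It only looks at the meta tag, so it would not catch a noindex sent through an HTTP header, and the sample HTML strings in the usage note are made up.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            for directive in content.split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())

def has_noindex(html):
    """Return True if the page carries a noindex robots meta directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives

print(has_noindex('<head><meta name="robots" content="noindex, nofollow"></head>'))
```

Running a check like this across your key templates after a redesign can catch the accidental-noindex problem before it costs you traffic.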

Creating helpful content is usually the most reliable long‑term fix for businesses and platforms of all types. However, not all businesses can generate such content or create such user experiences.

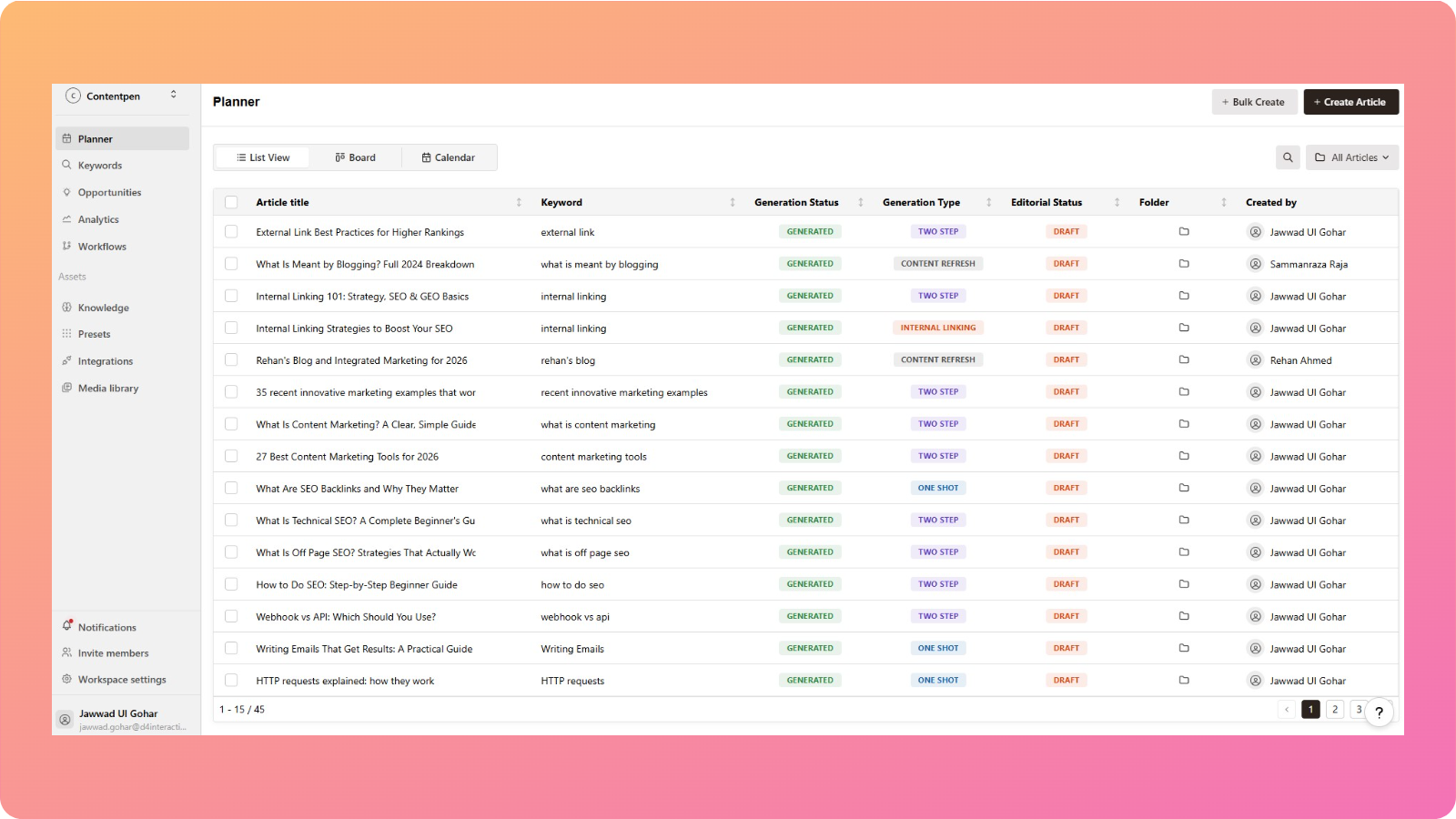

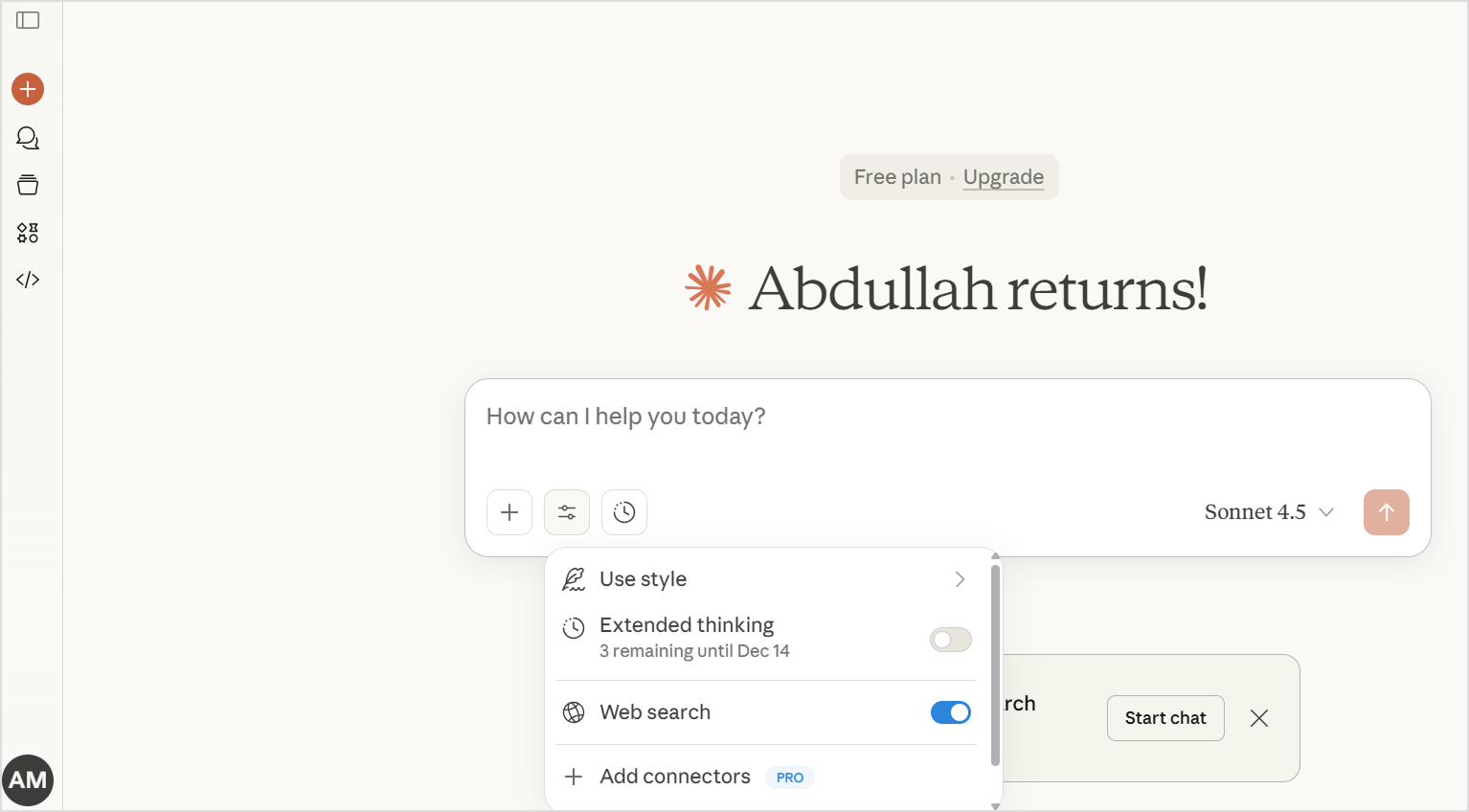

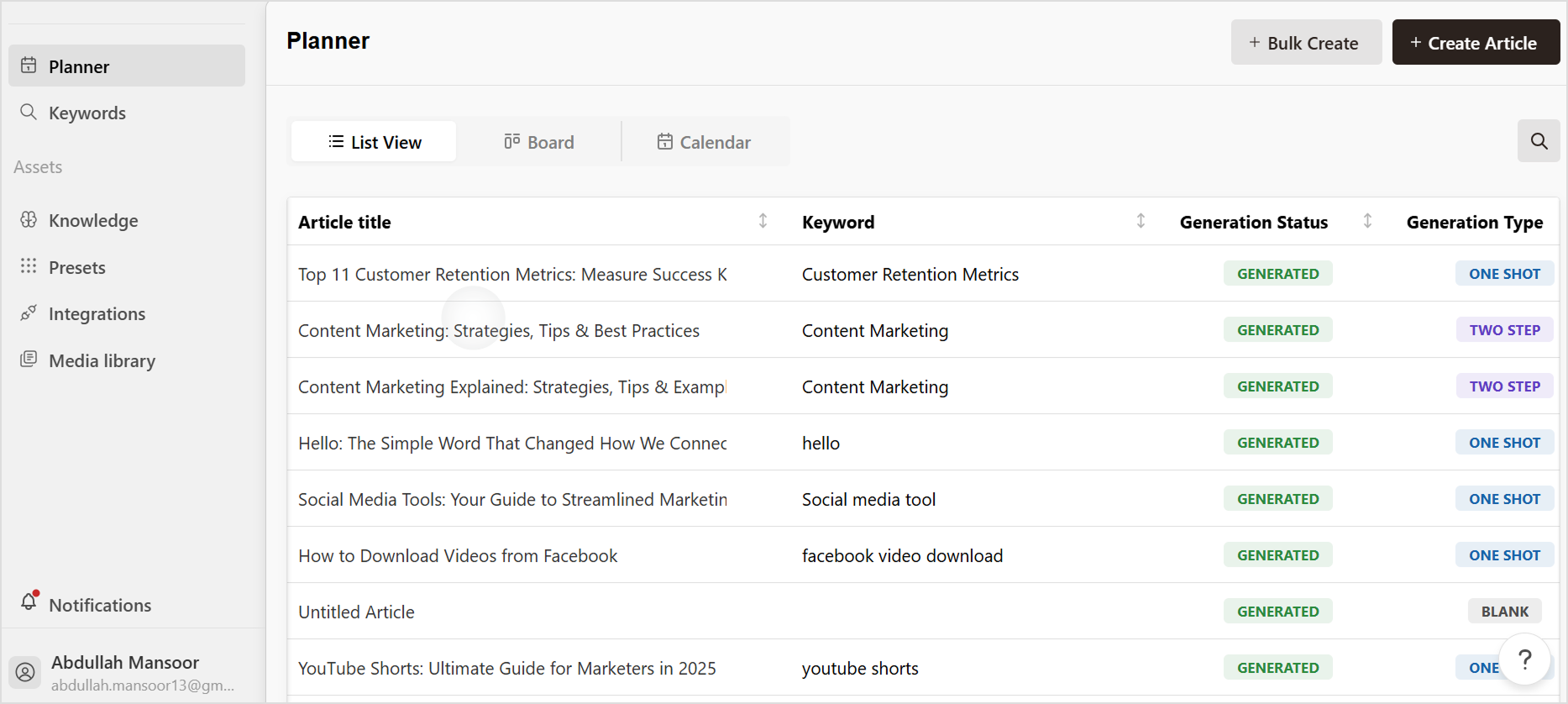

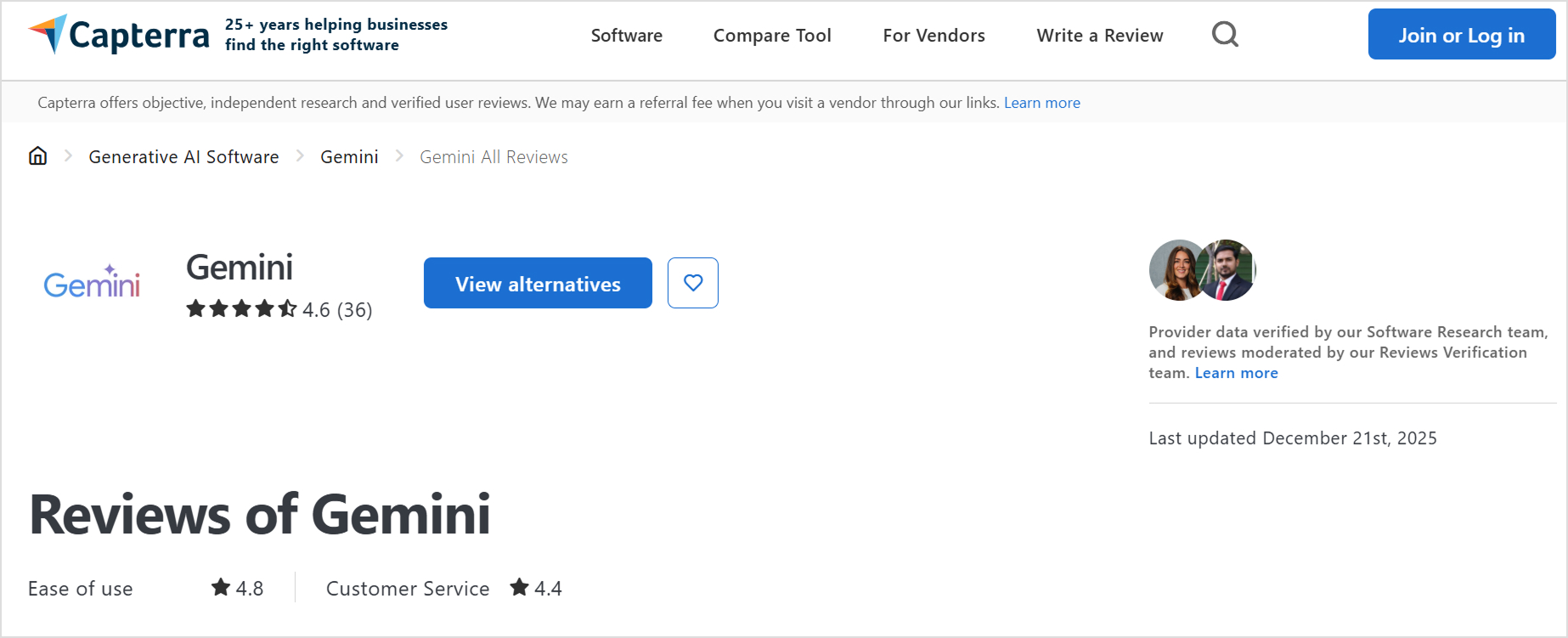

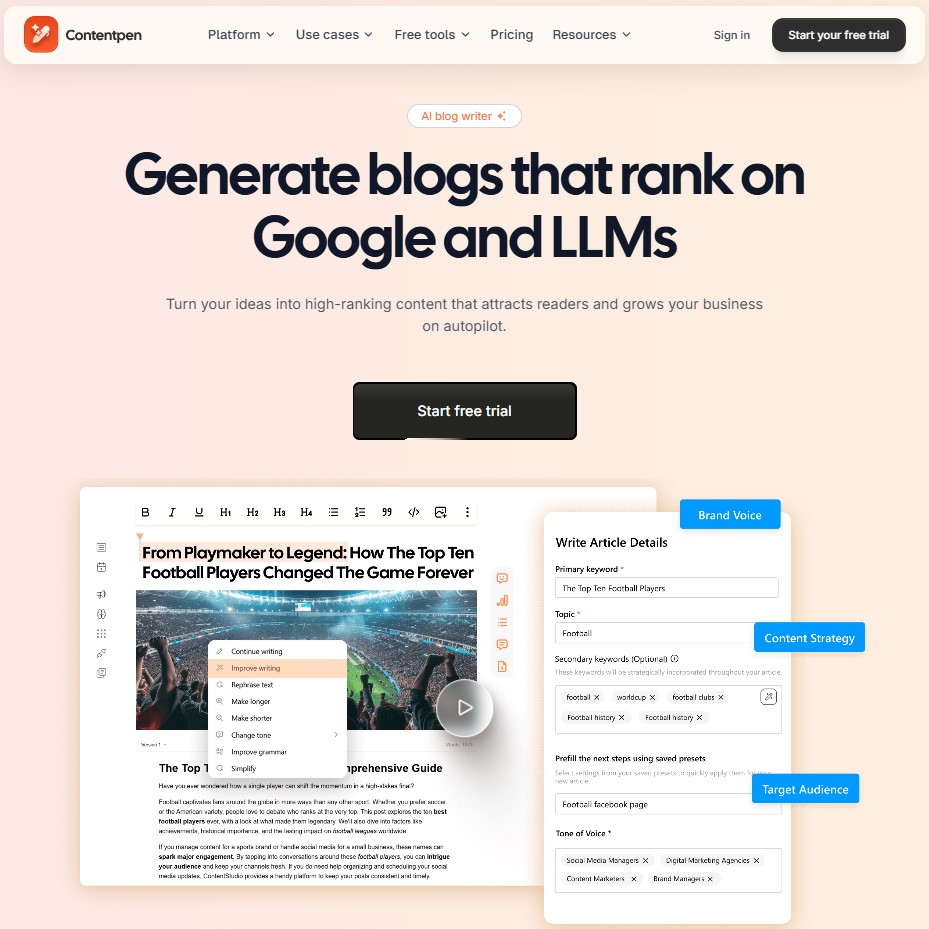

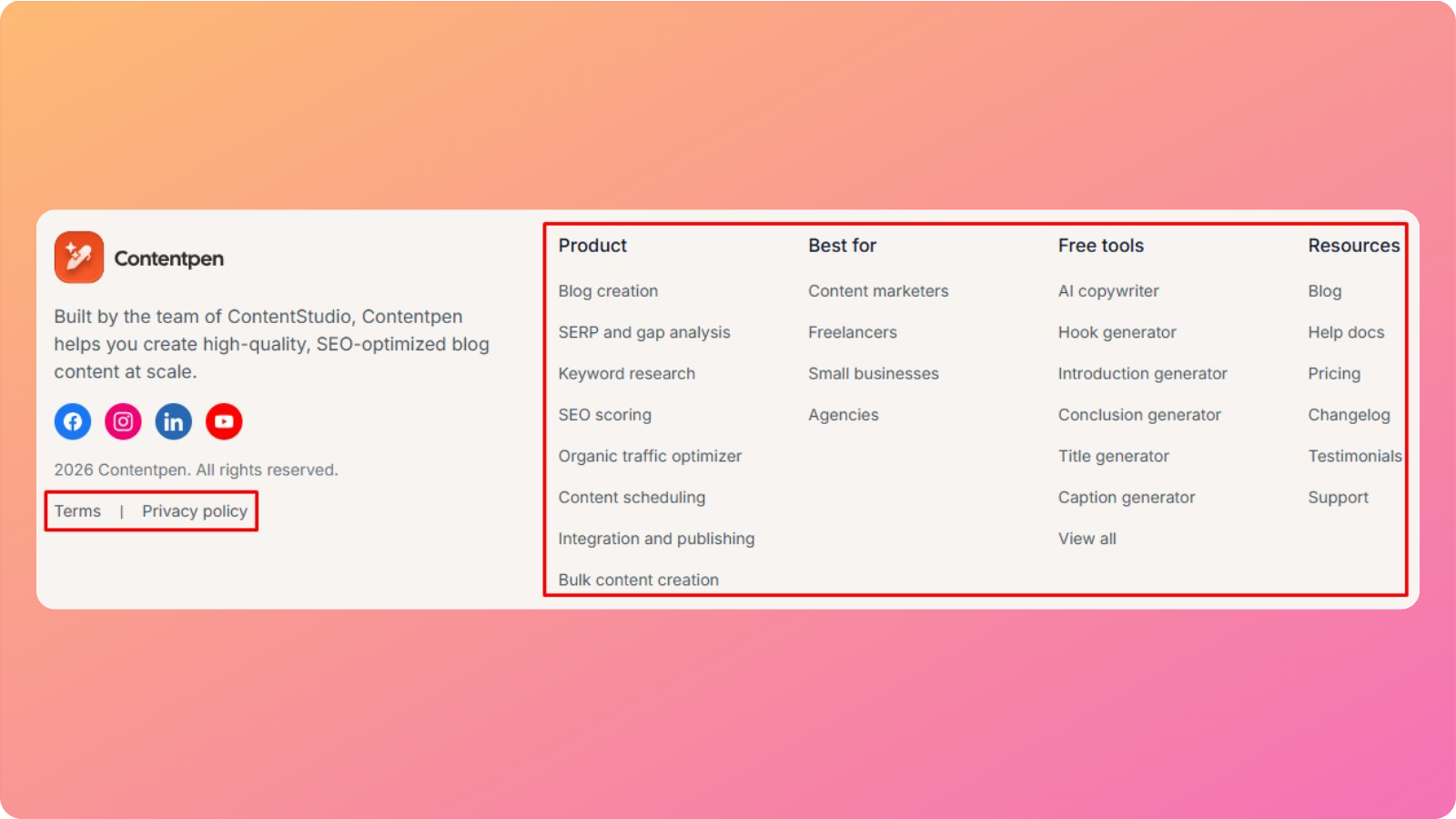

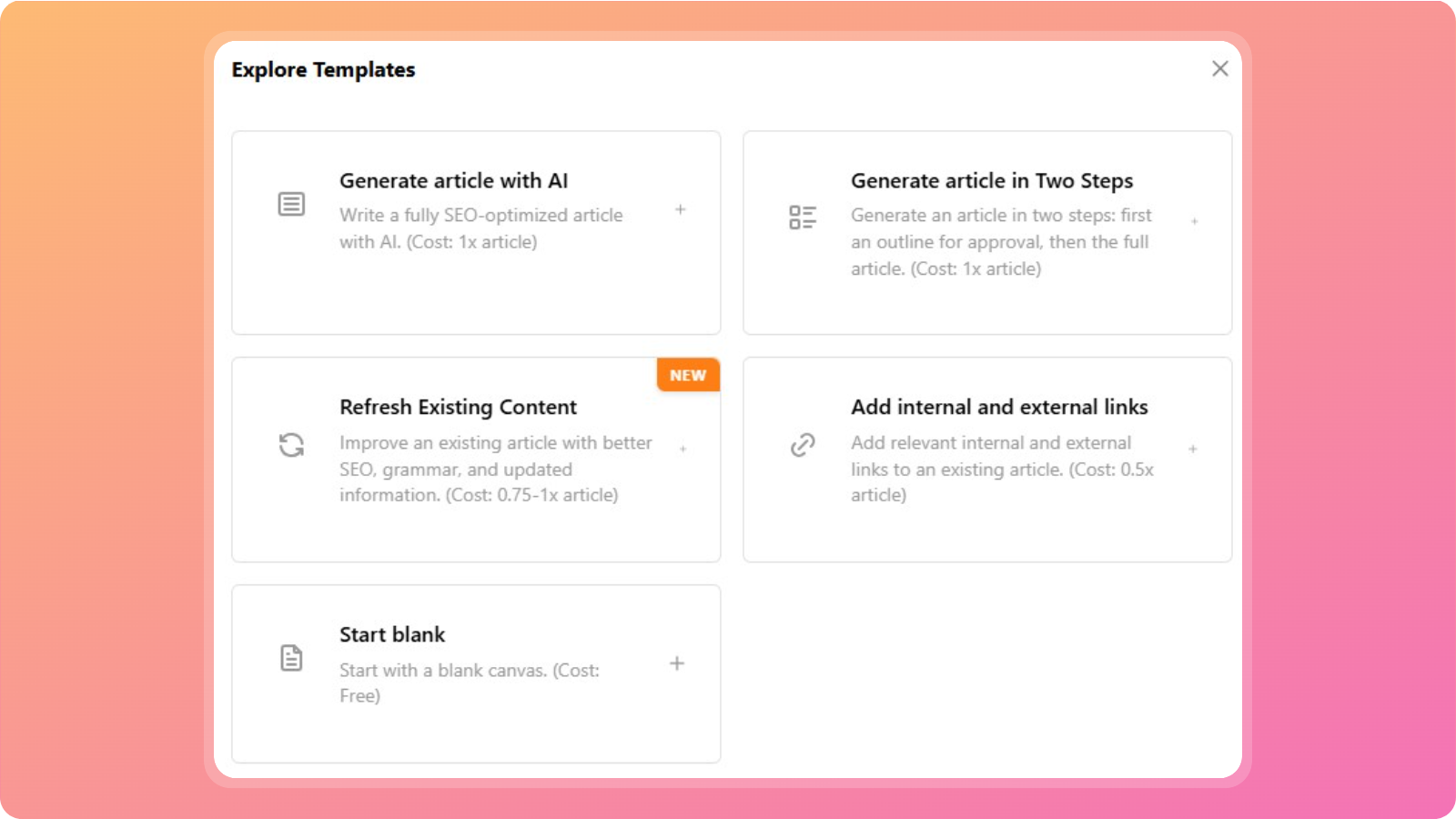

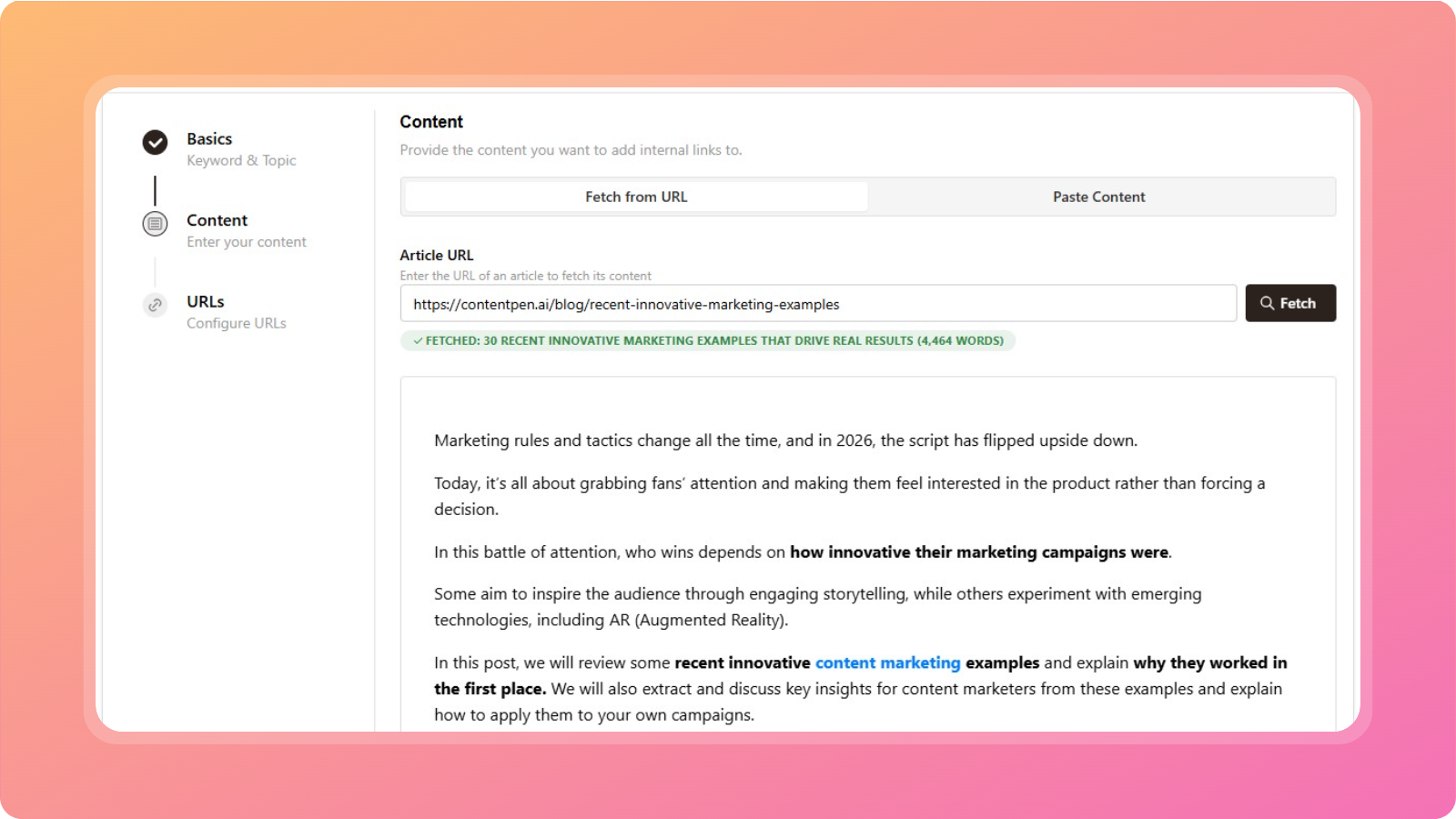

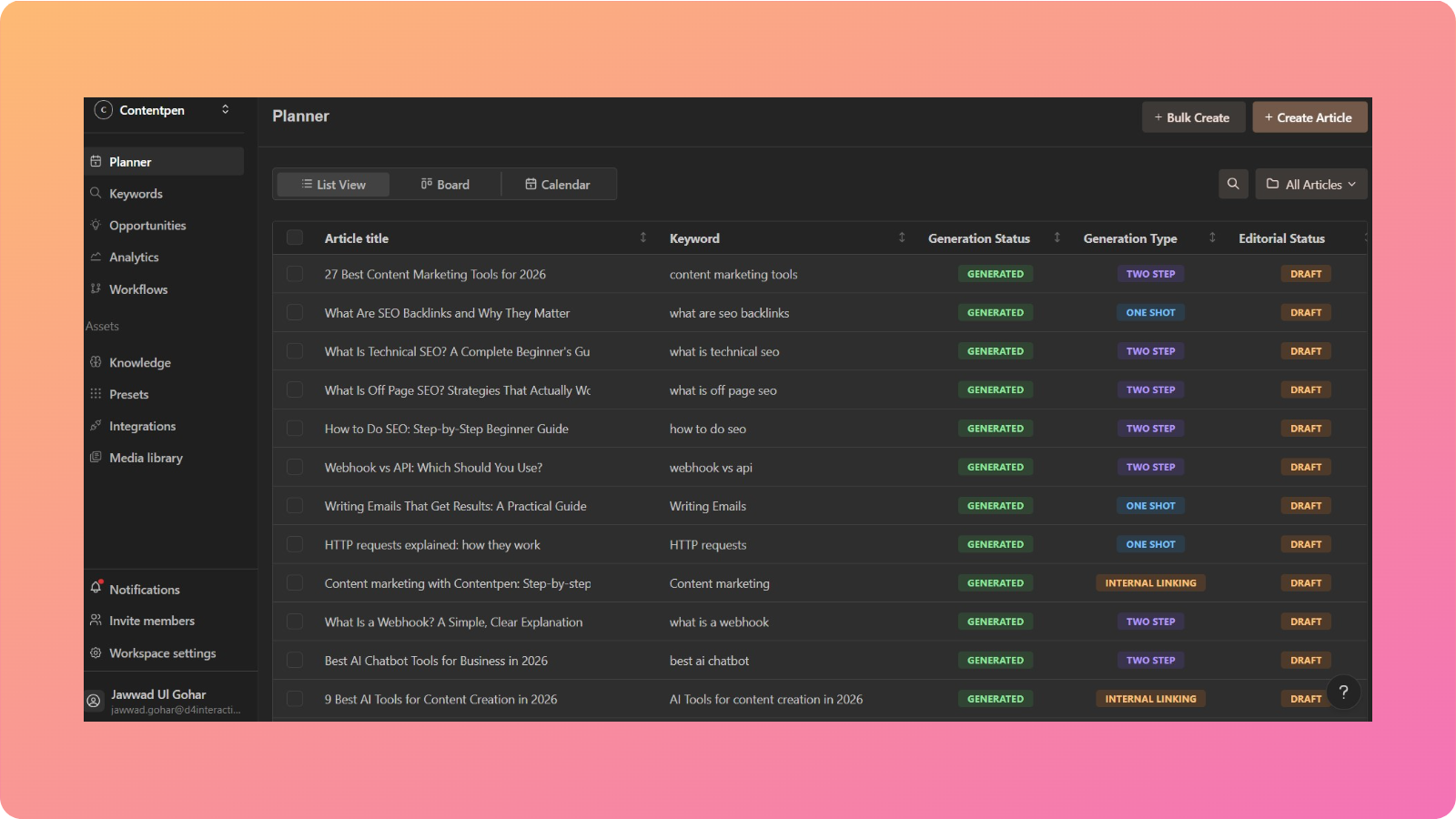

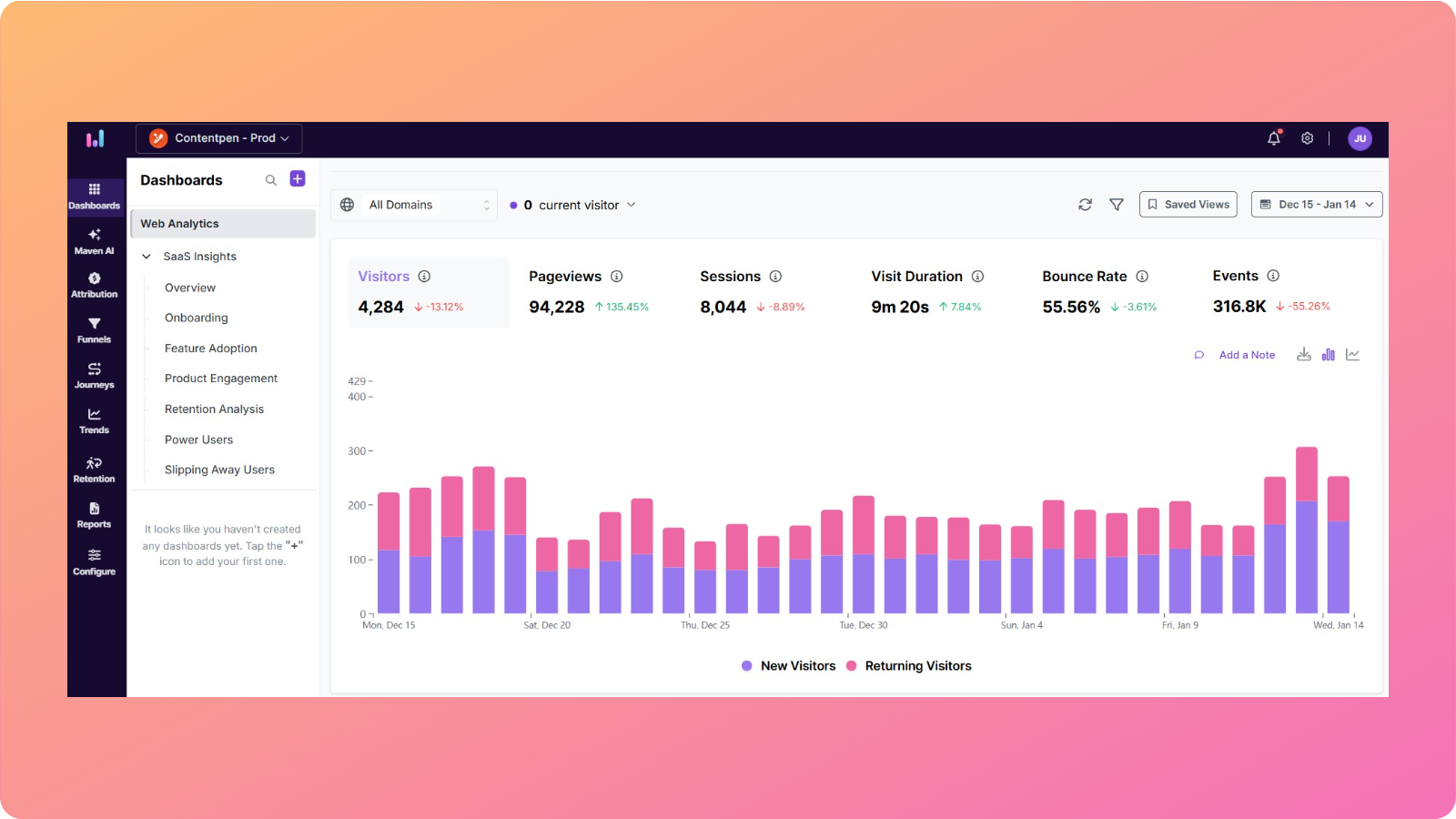

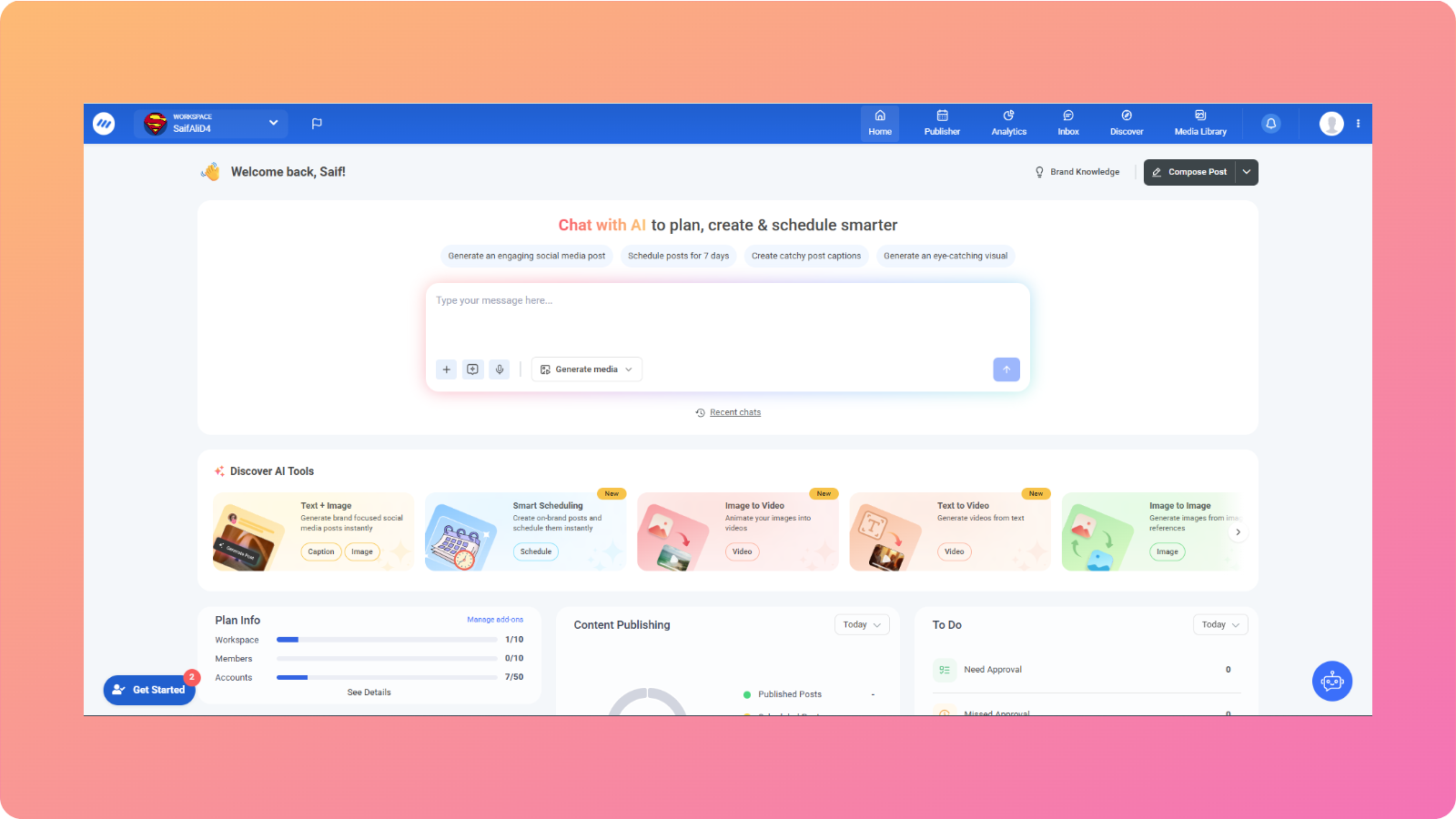

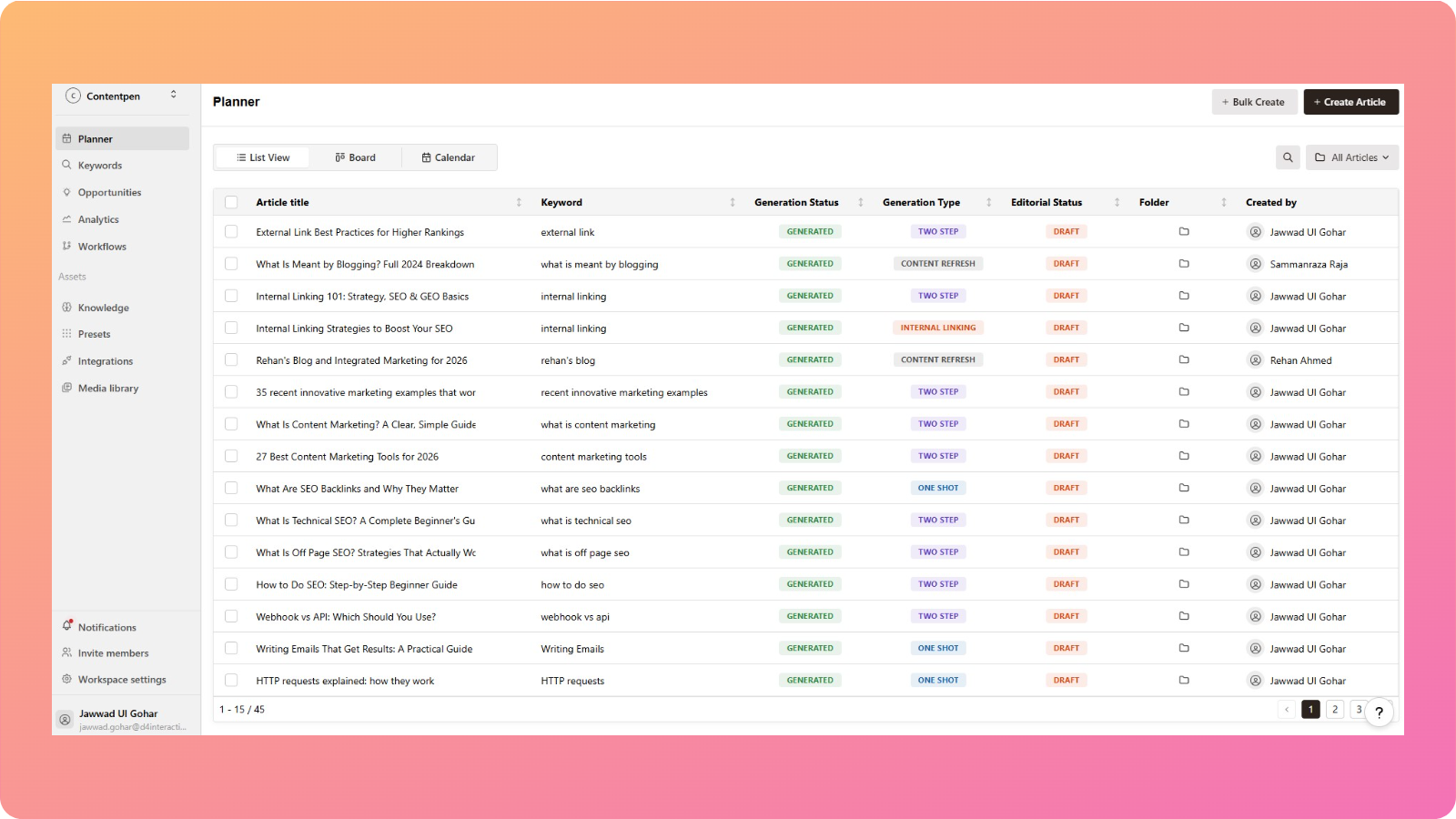

That is where tools like Contentpen can help. It automatically generates high-quality, well-structured, SEO- and GEO-optimized content at scale while handling internal and external linking.

The best part is that the tool also integrates with popular CMS platforms such as WordPress, Ghost, Wix, and others, so you can publish without switching tabs and stay on schedule.

Step #3: Serving results – How search engines decide what you see

Ranking and serving is the stage most people notice. It is what happens after someone types a query into the search box and presses enter. The search engine now has to choose which of its billions of indexed pages to show and how to order them.

The process starts with query processing. The system parses the user’s input, infers intent, converts words into its internal numerical form, and compares it against the index. It looks for pages that match not only the literal keywords but also related concepts and common patterns for that type of search.

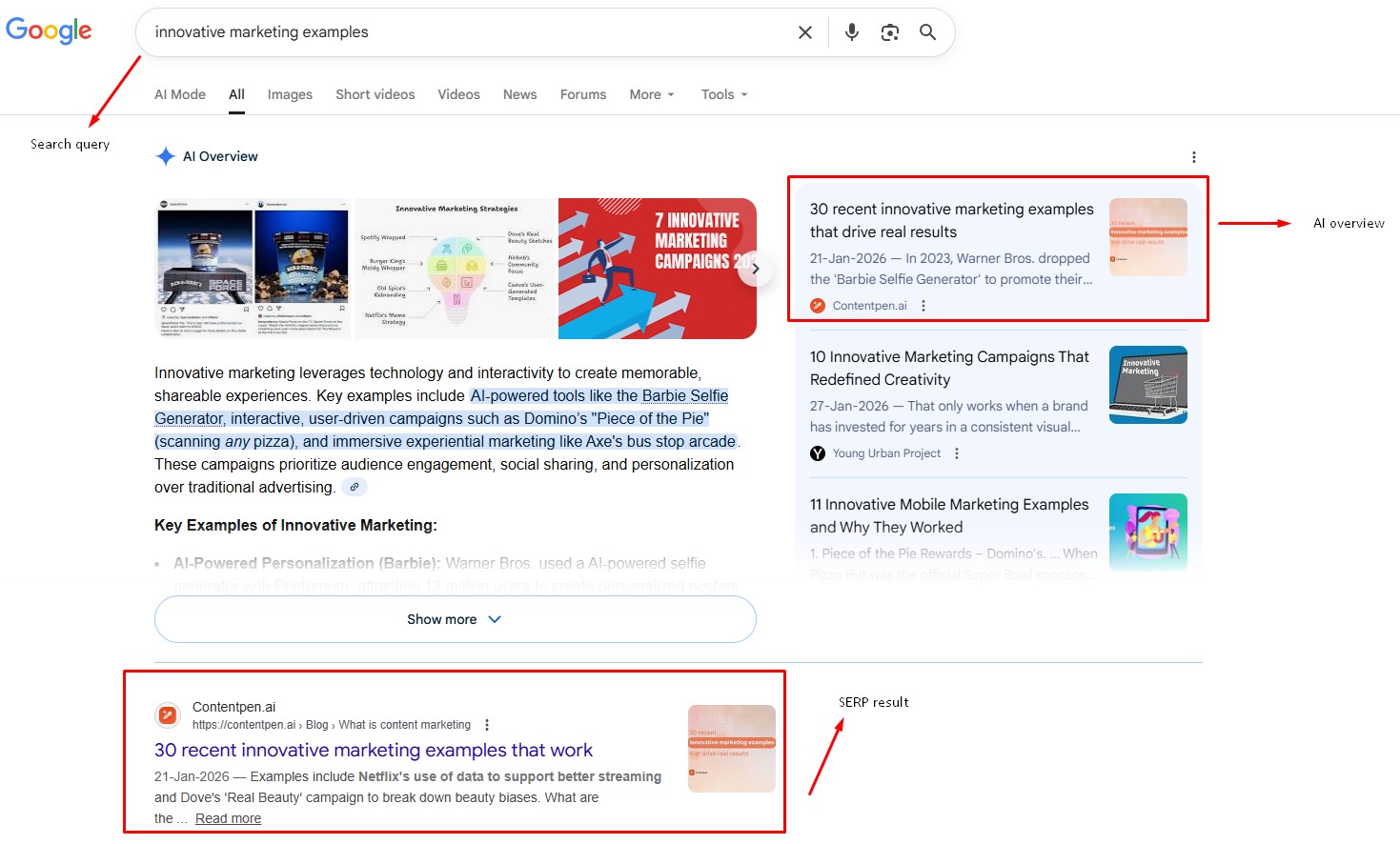

The results appear on the Search Engine Results Page (SERP). This page can include standard blue links, local map results, image carousels, videos, product cards, and featured snippets that try to answer the question right away. The layout changes based on what the engine believes the searcher wants in that moment.

Behind the scenes, hundreds of ranking factors influence which pages rise to the top. These factors fall into groups such as relevance, content quality, authority, user context, and technical health.

Query processing from the search box to the results

When a query comes in, the search engine first cleans and interprets it. It may correct spelling, expand abbreviations, or guess that certain words form a known phrase. Then it converts that processed query into the same kind of numerical tokens used in the index.

Next, the engine uses math to compare the query tokens with the tokens stored for each page. It looks for close matches, related terms, and patterns that signal a strong answer. This matching step happens extremely quickly.
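
One textbook way to compare a query with pages using math is TF-IDF weighting plus cosine similarity. The sketch below uses that technique purely as an illustration. The source does not say which methods real engines use, and the three sample documents are invented.

```python
import math
from collections import Counter

# Hypothetical documents standing in for indexed pages.
DOCS = {
    "brew-guide": "how to brew coffee at home with a french press",
    "tea-guide": "how to brew green tea without bitterness",
    "bike-repair": "how to fix a flat bike tire at home",
}

def tokenize(text):
    return text.lower().split()

def idf_table(docs):
    """Smoothed inverse document frequency: rarer terms get higher weights."""
    n = len(docs)
    df = Counter()
    for text in docs.values():
        df.update(set(tokenize(text)))
    return {term: math.log((1 + n) / (1 + count)) + 1 for term, count in df.items()}

def vectorize(text, idf):
    """Turn text into a sparse {term: weight} vector."""
    counts = Counter(tokenize(text))
    return {t: c * idf[t] for t, c in counts.items() if t in idf}

def cosine(a, b):
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def rank(query, docs):
    """Return document names ordered from best to worst match."""
    idf = idf_table(docs)
    q = vectorize(query, idf)
    scores = {name: cosine(q, vectorize(text, idf)) for name, text in docs.items()}
    return sorted(scores, key=scores.get, reverse=True)

print(rank("brew coffee", DOCS))
```

Here the page that mentions both "brew" and "coffee" comes first, the page that mentions only "brew" comes second, and the unrelated page scores zero.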

Search operators give users more control when searching for specific information. The table below lists common operators you can use in everyday searches:

| Search operator | What it does | Example |
| --- | --- | --- |
| "" | Search for an exact word or phrase | "NVIDIA" |
| OR / \| | Search for results related to either one term or the other | NVIDIA OR AMD |
| AND | Search for results related to both terms | NVIDIA AND AMD |
| - | Exclude results that mention a specific word | NVIDIA -AMD |
| site: | Search for results from a specific site | site:nvidia.com |
| in | Convert one unit to another | £84 in USD |

There are many more search operators you can use to narrow results and find the information you need quickly.

These operators, especially site: and quotation marks, are useful for competitive research, content audits, and technical checks. They also help you see how many of your pages are visible in search and which ones need editing.
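
If you run the same audit searches often, a small helper can assemble them for you. This sketch combines the quote, site:, and minus operators into one query string. The `example.com` domain is a placeholder, and the search URL format with a `q` parameter is an assumption based on how Google’s public search URLs commonly look.

```python
from urllib.parse import urlencode

def build_query(terms="", exact=None, site=None, exclude=()):
    """Assemble a query string using the quote, site:, and minus operators."""
    parts = []
    if site:
        parts.append(f"site:{site}")
    if exact:
        parts.append(f'"{exact}"')
    if terms:
        parts.append(terms)
    parts.extend(f"-{word}" for word in exclude)
    return " ".join(parts)

def search_url(query):
    # Assumes the public search URL format with a `q` parameter.
    return "https://www.google.com/search?" + urlencode({"q": query})

q = build_query(exact="search engine basics", site="example.com", exclude=["pdf"])
print(q)
print(search_url(q))
```

Swapping in your own domain and a few target phrases gives you a repeatable set of audit links.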

The main ranking factors and what really matters

While search algorithms use hundreds of inputs, many of them boil down to a few main themes. If you keep these in mind while planning content and technical work, you can cover most of what matters for search engine optimization basics:

- Relevance – Does the page match the searcher’s intent and query? Pages that repeat a phrase without real depth often lose out to pages that answer questions in a clear, thorough way.

- Content quality – Search systems look for signs of expertise, author bylines that make sense for the topic, clear sourcing, and original thought. They pay attention to grammar, readability, and structure because those details affect how helpful a piece feels.

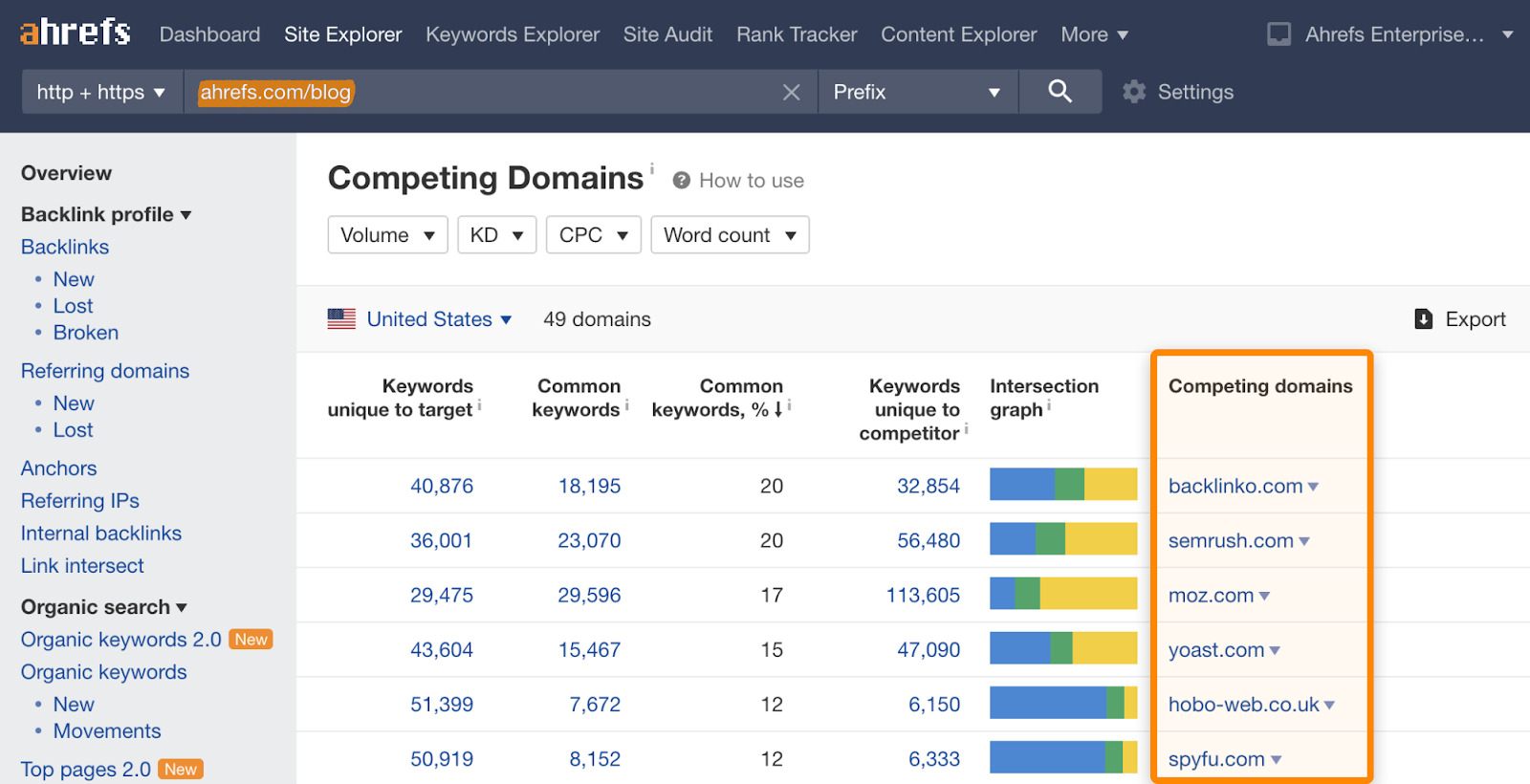

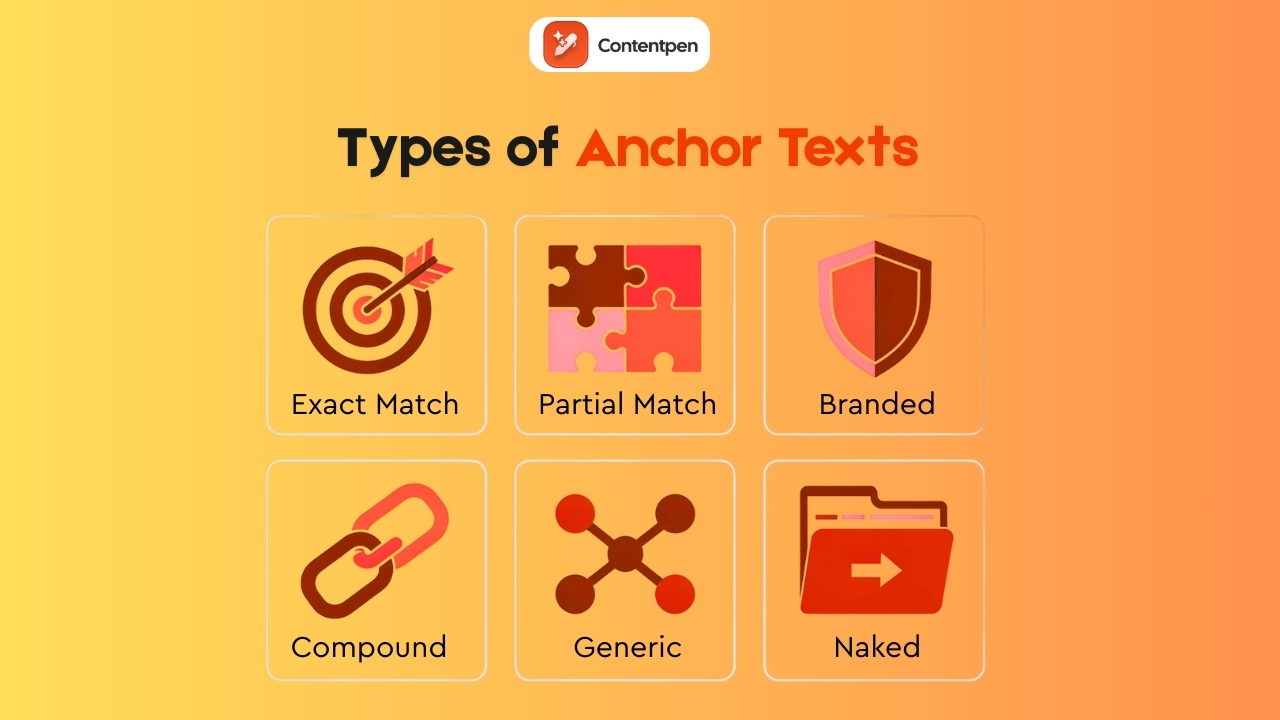

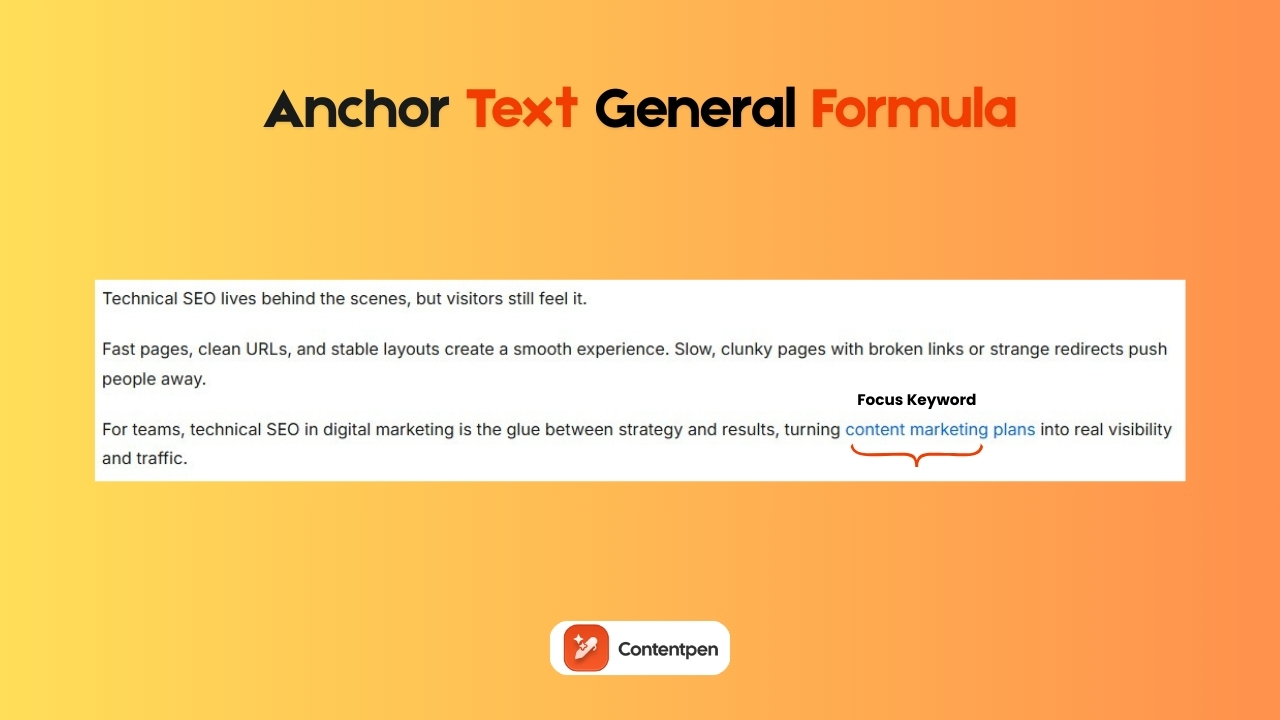

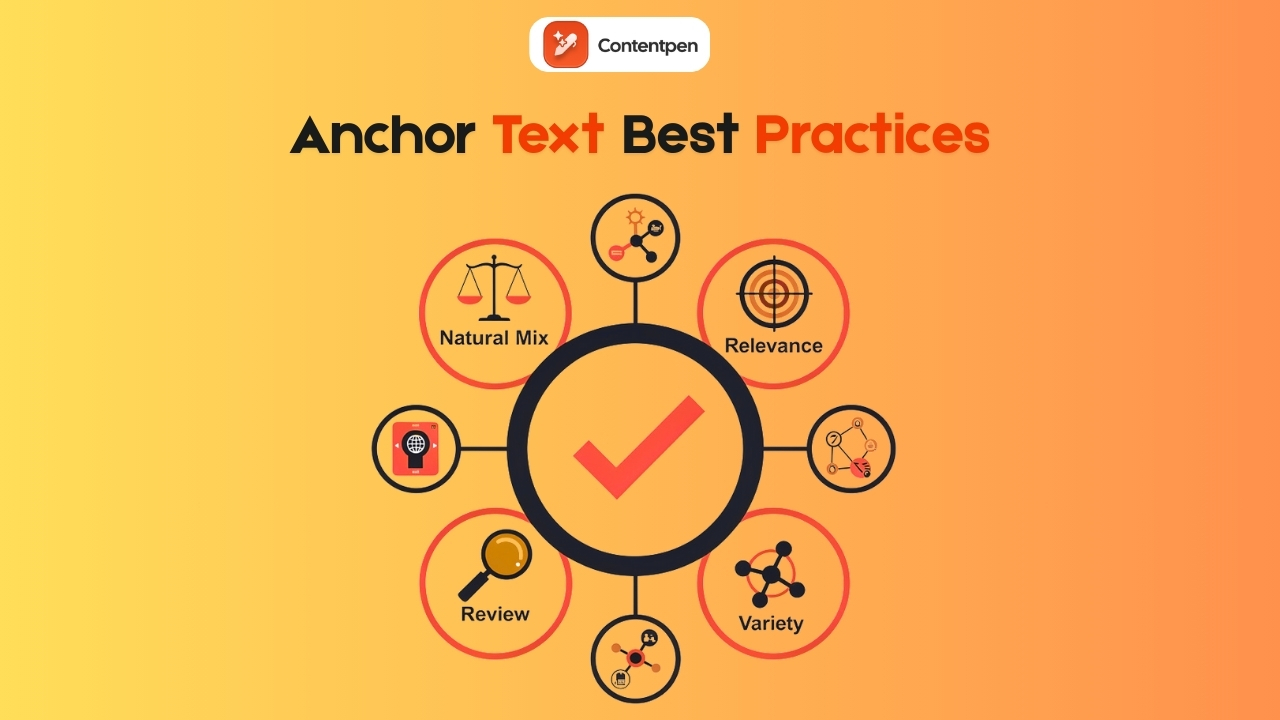

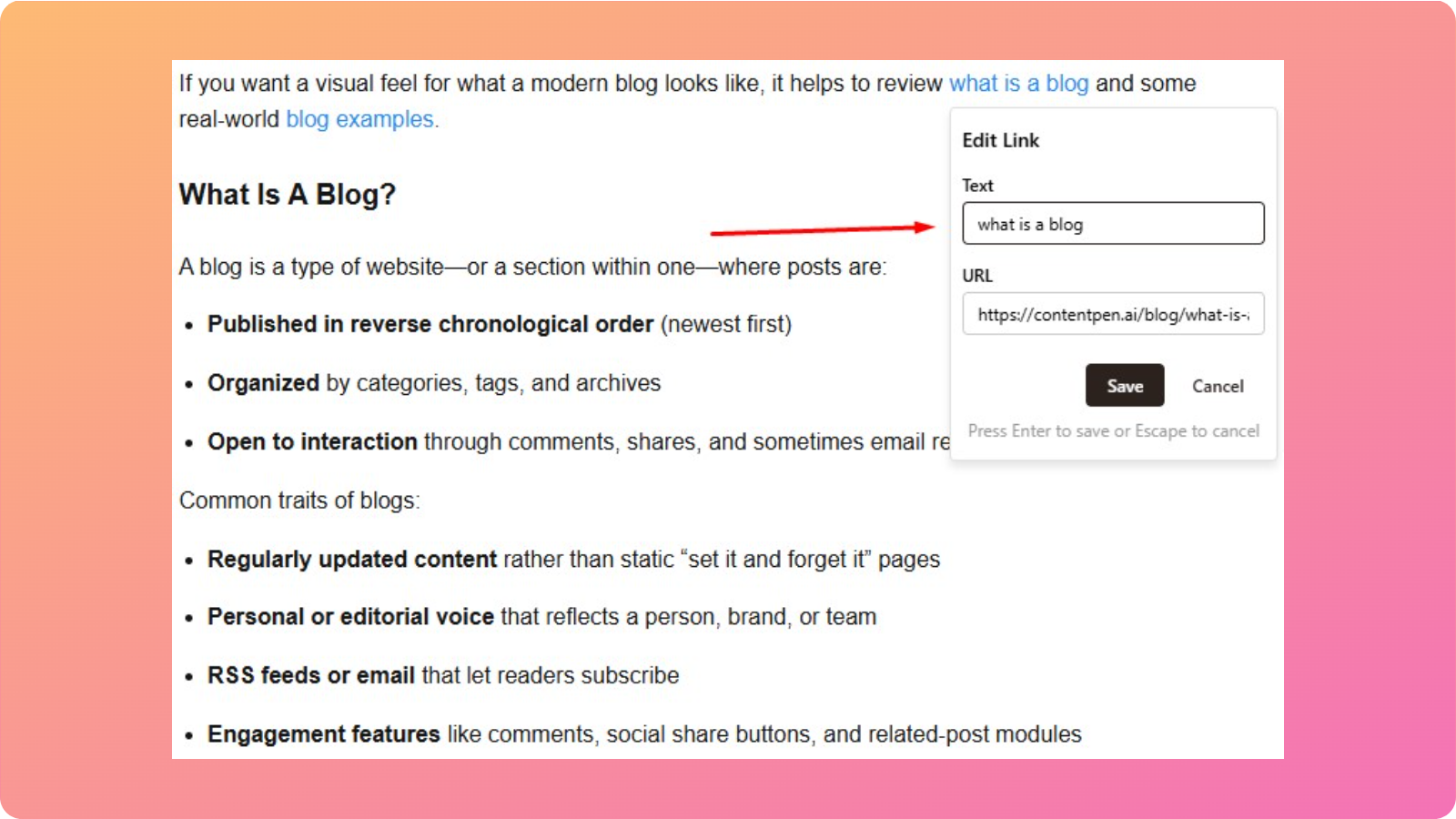

- Authority – This flows mostly through links. A strong backlink profile includes links from relevant sites, a natural mix of anchor text, and steady growth over time.

- User context and experience – Location, language, device type, and past behavior all influence which results someone sees. Technical factors such as page speed, mobile readiness, HTTPS, and clean site architecture also matter.

You cannot buy higher organic rankings.

Paid ads can give a site visible placements marked as sponsored, but the main results depend on how well the algorithm scores your pages across all these areas.

What you can do, instead, is focus on SEO basics rather than looking for shortcuts.

How SERP features change based on search intent

The SERP format shifts based on what the engine believes the user wants, often referred to as search intent.

- For local intent searches, such as pizza near me, the SERP often shows a map with nearby businesses, star ratings, and quick buttons to call or get directions. For these searches, local SEO basics and a strong Google Business Profile matter a lot.

- For informational intent searches, such as how to write a blog post, you may see a featured snippet at the top that pulls a short answer from one page. In this case, clear headings, direct answers, and well‑structured content can help you earn the top spot.

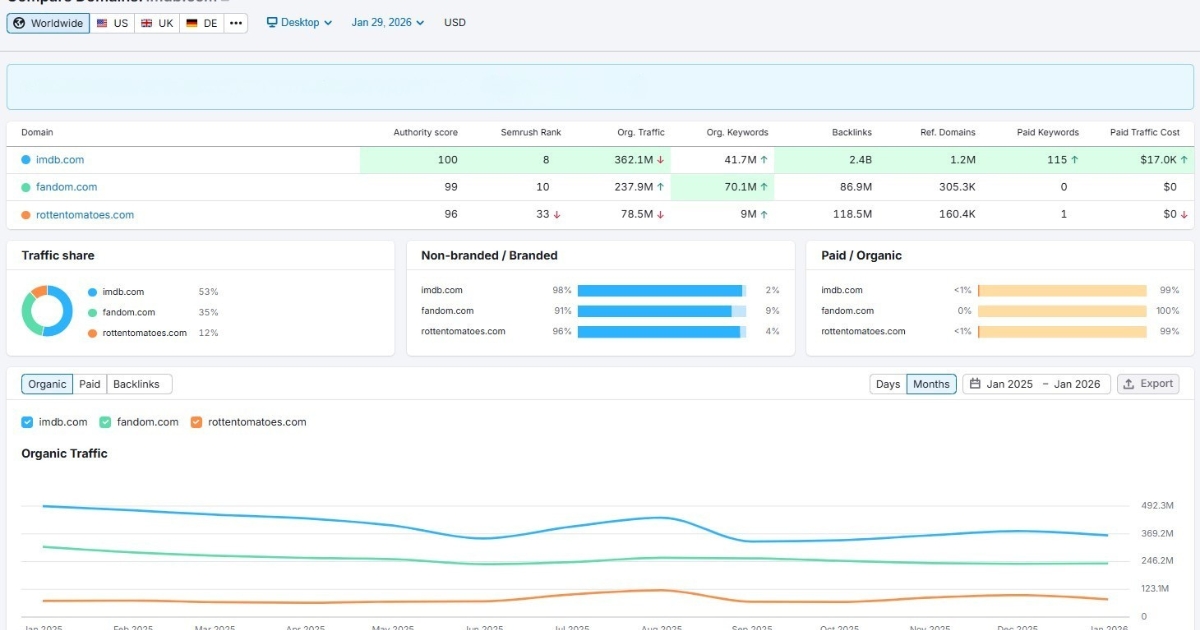

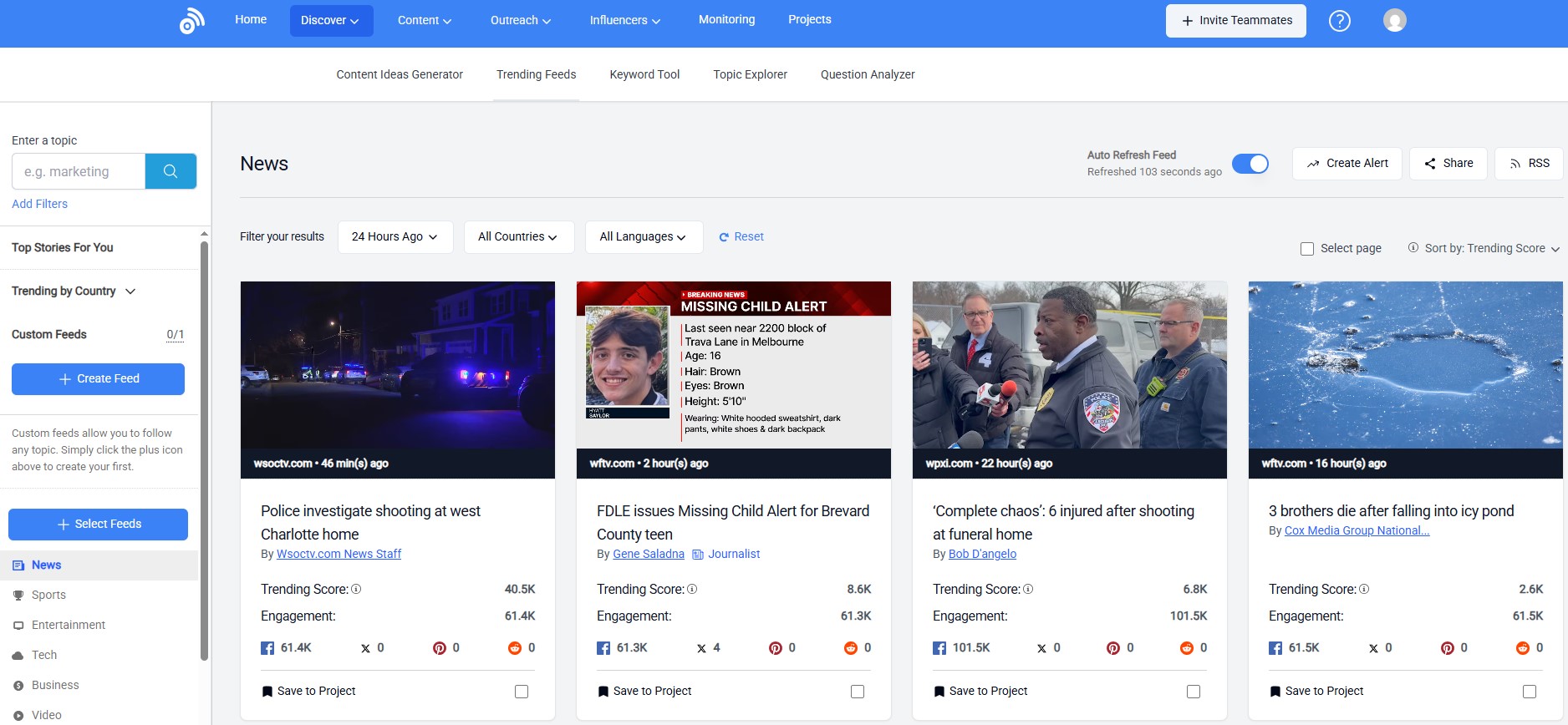

- For commercial intent searches, such as best SEO competitive analysis tools, review snippets and product grids often appear. Comparison content, rich product data, and honest reviews do well here.

- For navigational intent searches, such as Contentpen login, results mostly show brand pages that help users quickly reach a specific site.

Studying these types of search intents before picking target keywords is a smart move. It shows what kind of content Google expects for each phrase and gives a clearer sense of how to shape your own pages for better SERP and AI Overview visibility.
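
For a quick first pass over a keyword list, you can tag each phrase with a likely intent using simple cue words. The sketch below is a rough heuristic built from the examples above. The cue lists are assumptions, and real engines infer intent from far richer signals, so always confirm by looking at the live SERP.

```python
# Hypothetical cue phrases drawn from the examples above.
INTENT_CUES = [
    ("local", ("near me", "nearby", "open now")),
    ("navigational", ("login", "sign in", "official site")),
    ("commercial", ("best", "top", "review", "vs")),
    ("informational", ("how to", "what is", "why", "guide")),
]

def guess_intent(query):
    """Return the first intent whose cue appears as whole words in the query."""
    padded = f" {query.lower().strip()} "
    for intent, cues in INTENT_CUES:
        if any(f" {cue} " in padded for cue in cues):
            return intent
    return "unknown"

for keyword in [
    "pizza near me",
    "how to write a blog post",
    "best SEO competitive analysis tools",
    "Contentpen login",
]:
    print(keyword, "->", guess_intent(keyword))
```

Phrases that come back as `unknown` are the ones most worth checking by hand.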

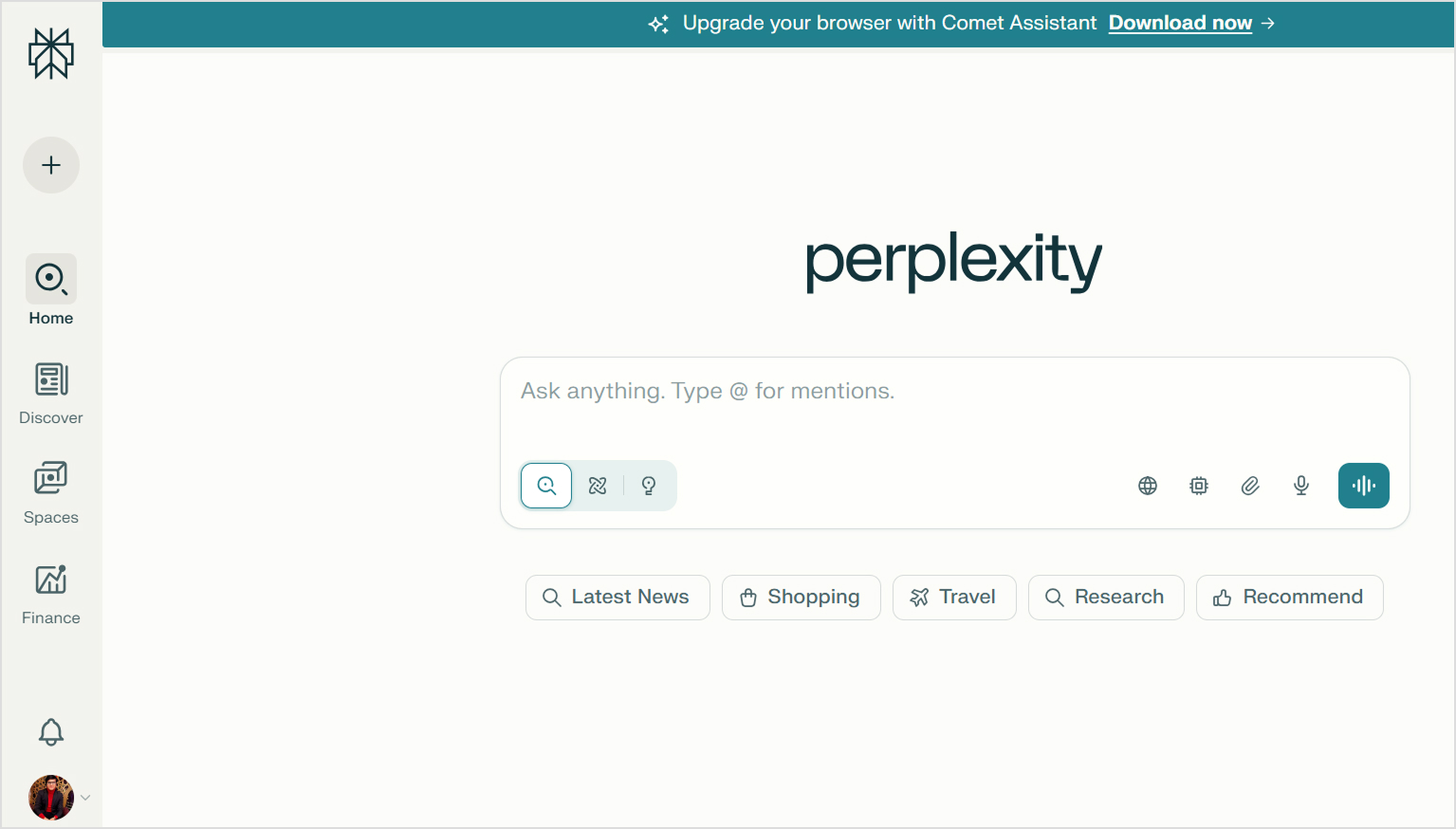

Why search results vary across Google, Bing, and AI search engines

Search results can vary a lot from one engine to another, and even from one person to another on the same engine. The reasons lie in differences in indexes, algorithms, and personalization.

Each major search engine runs its own crawler bot and keeps its own index. Googlebot, Bingbot, and others start from different seed URLs, follow different paths, and may apply different rules about what to store.

On top of that, they use different ranking formulas. One engine might give more weight to backlinks, while another might pay closer attention to on‑page engagement signals or social cues. The exact formulas are treated as trade secrets and change over time.

Personalization adds another layer. Location, language settings, search history, sign‑in status, device type, and even browser can influence which results rise to the top for a given person.

From a practical standpoint, most US‑focused teams use Google as their primary source for SEO basics.

Still, it can be useful to check other engines for competitive research and to avoid stressing over small ranking shifts that may simply reflect personalization.

How to optimize for search engines with SEO fundamentals

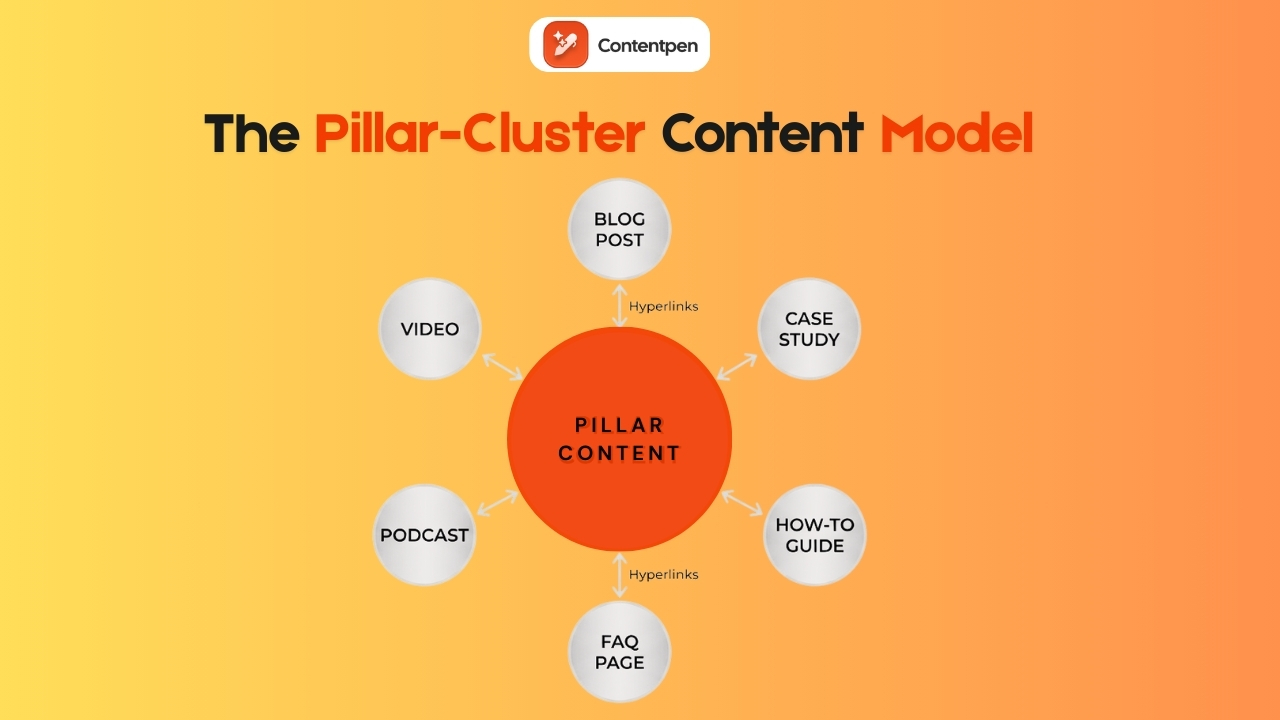

Good search engine optimization basics rest on three pillars:

- Access – Crawlers need clear paths and fast responses.

- Clarity – Algorithms need well‑organized, helpful content.

- Authority – Users and other sites need reasons to trust and recommend your pages.

Now, let’s look at each of these in more detail.

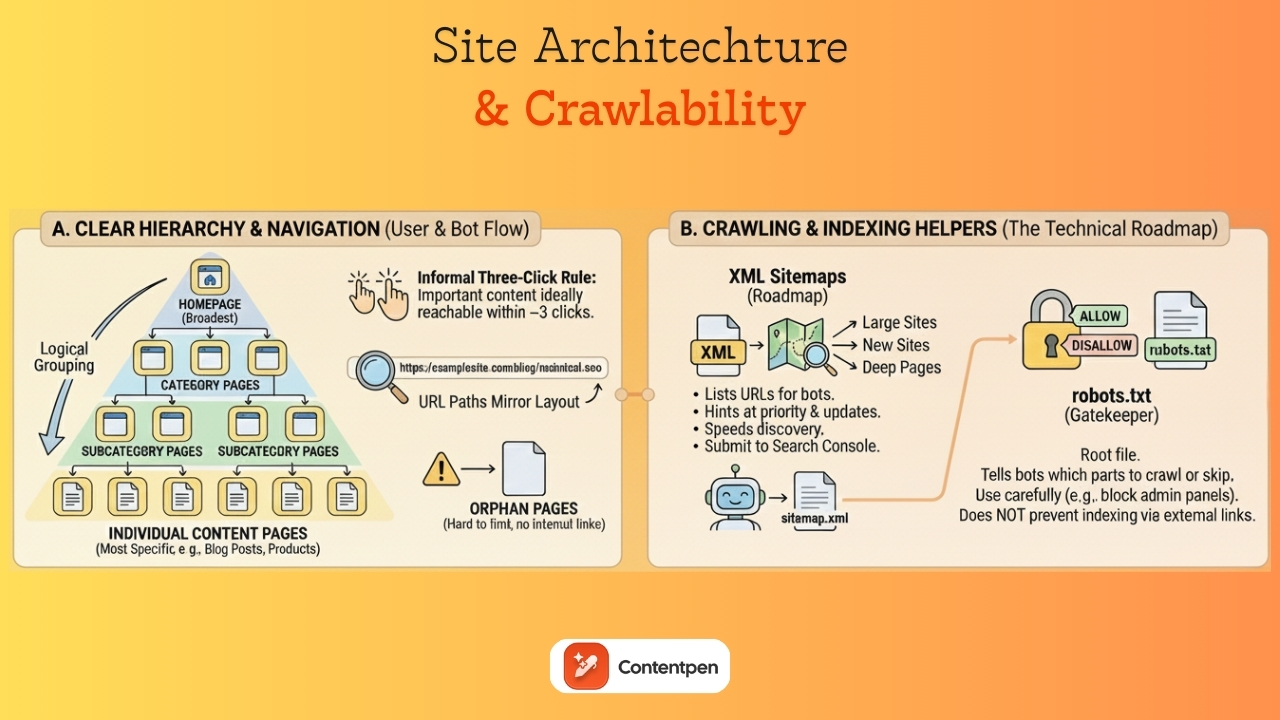

Crawling optimization to ensure content discoverability

Crawling optimization focuses on making your site easy and safe for bots to explore. Even small steps here can lead to better coverage.

- Create and submit XML sitemaps. Many content management systems automatically generate these files, which list key URLs. By submitting them to Google Search Console (GSC), you give crawlers a clear overview of your site.

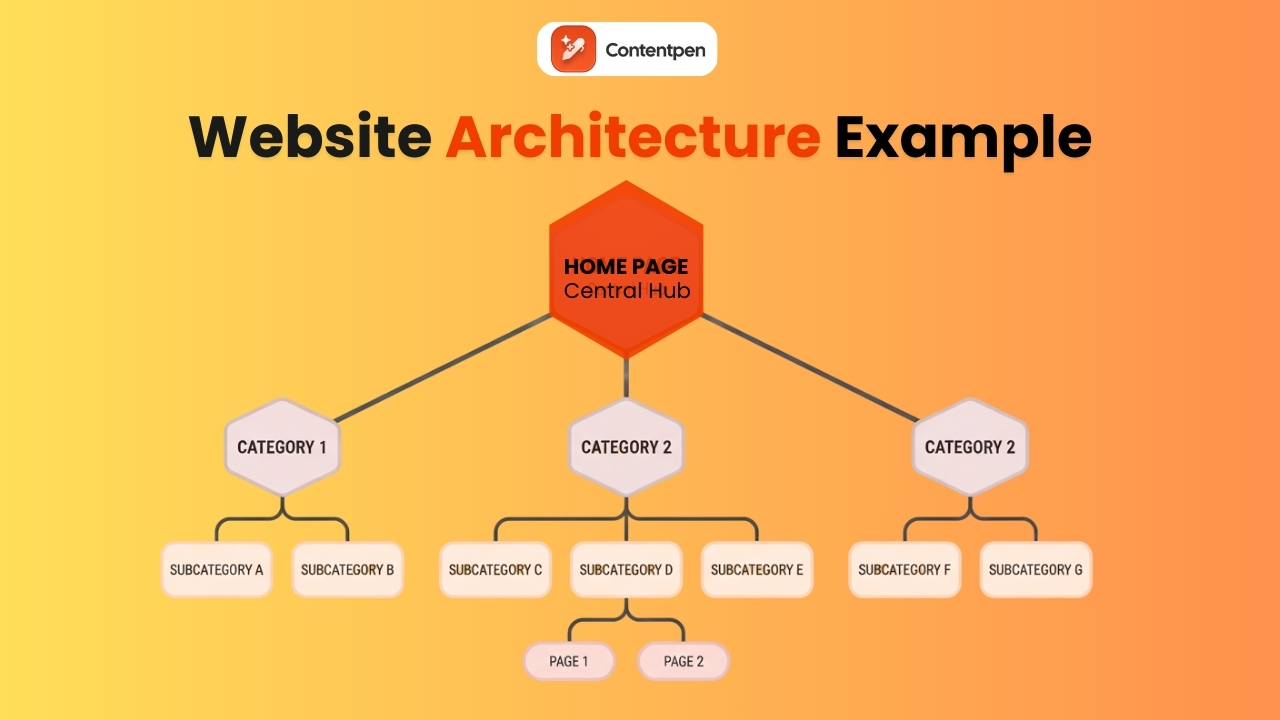

- Build a clear internal linking structure. Every important page should be reachable within a few clicks from the home page, ideally through logical category and subcategory paths.

- Keep robots.txt files clean and focused. Regular audits after site changes can catch accidental blocking of key folders. Monitoring crawl errors and coverage reports in Search Console lets you spot repeated issues, such as broken redirects or error pages.

On larger sites, considering crawl budget and trimming very low‑value pages or thin filters can keep crawlers focused on the URLs that matter most.
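
The "few clicks from the home page" rule can be checked with a short script once you have a list of internal links, for example from a crawl export. In this sketch the `SITE_LINKS` graph and the `max_depth` threshold are made-up assumptions, so tune both to your own site.

```python
from collections import deque

# Hypothetical internal link graph: page -> pages it links to.
SITE_LINKS = {
    "/": ["/products", "/blog"],
    "/products": ["/products/shoes"],
    "/products/shoes": ["/products/shoes/red-runner"],
    "/products/shoes/red-runner": [],
    "/blog": ["/blog/seo-basics"],
    "/blog/seo-basics": ["/products/shoes"],
    "/old-landing-page": ["/"],  # no inbound links: an orphan
}

def click_depths(links, home="/"):
    """Fewest clicks needed to reach each page from the home page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

def audit(links, max_depth=3):
    """Return (depths, orphan pages, pages deeper than max_depth)."""
    depths = click_depths(links)
    orphans = sorted(set(links) - set(depths))
    too_deep = sorted(page for page, depth in depths.items() if depth > max_depth)
    return depths, orphans, too_deep

depths, orphans, too_deep = audit(SITE_LINKS, max_depth=2)
print("orphans:", orphans)
print("too deep:", too_deep)
```

Orphans need at least one internal link pointing at them, and pages flagged as too deep are candidates for a link from a category page or other prominent content.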

Indexing optimization to make your content index-worthy

Indexing optimization is about giving search engines content that is worth storing and easy to understand. It sits at the crossroads of content quality and technical setup.

High‑quality, original content remains the foundation. That means writing pieces that cover a topic in real depth, speaking from experience where possible, and adding examples or data that readers cannot get from a quick skim elsewhere.

When you combine this with thoughtful keyword use, you support both users and algorithms.

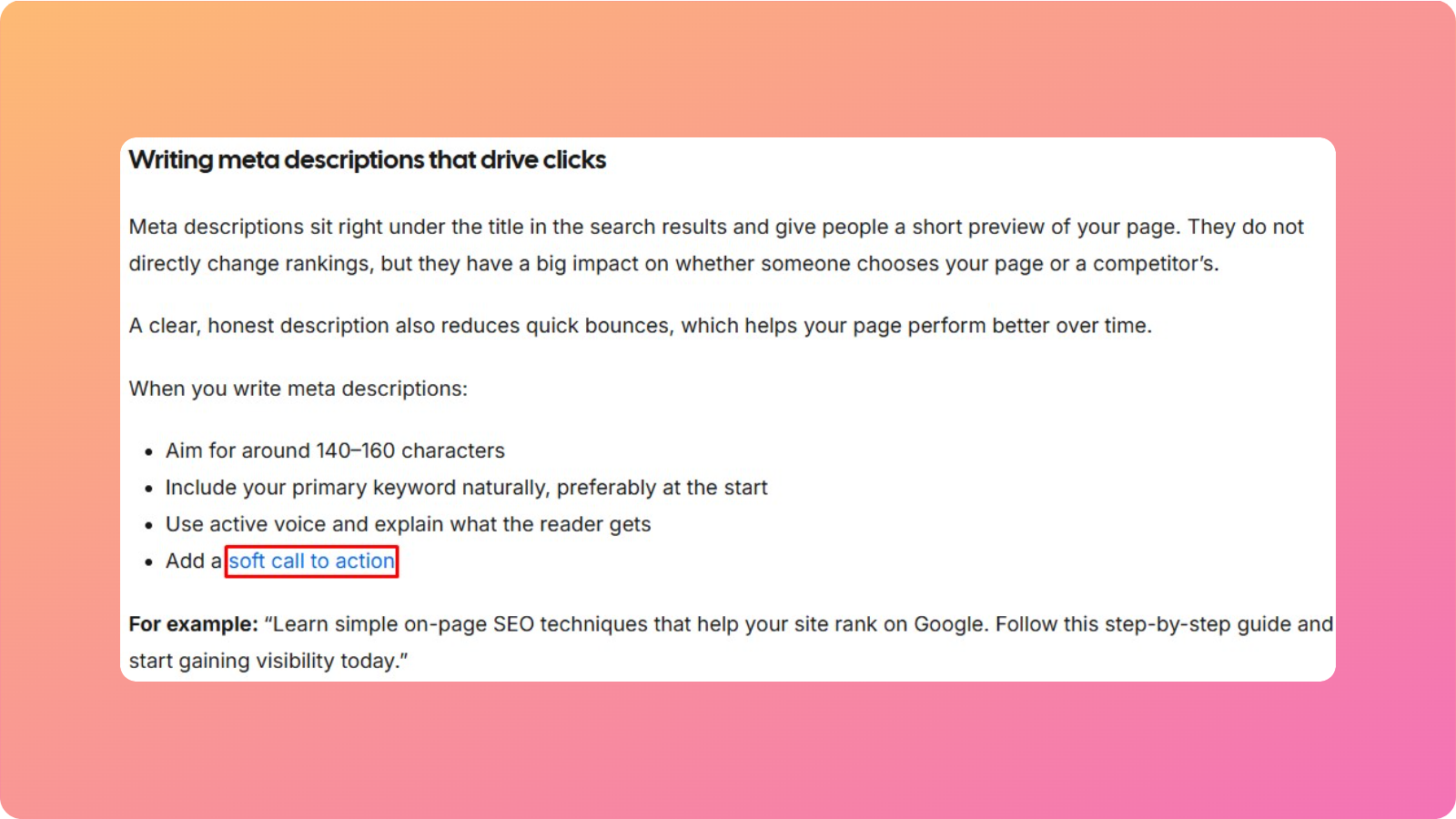

Our on-page SEO checklist helps convey that value:

- Clear, descriptive title tags that include primary keywords

- Honest meta descriptions that invite clicks

- A clean hierarchy of H1, H2, and H3 headings

- Descriptive alt text on images
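
A checklist like this is easy to automate for a first pass. The sketch below flags pages that miss the basics. The "exactly one H1" rule is a common convention rather than something stated above, and some decorative images legitimately have empty alt text, so treat the output as prompts for review, not hard errors.

```python
from html.parser import HTMLParser

class OnPageAudit(HTMLParser):
    """Collect the title, meta description, headings, and alt-less images."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta_description = ""
        self.headings = []  # e.g. ["h1", "h2", "h3"]
        self.images_missing_alt = 0
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attr.get("name") or "").lower() == "description":
            self.meta_description = attr.get("content") or ""
        elif tag in ("h1", "h2", "h3"):
            self.headings.append(tag)
        elif tag == "img" and not (attr.get("alt") or "").strip():
            self.images_missing_alt += 1

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data

def audit_page(html):
    """Return a list of human-readable issues found in the HTML."""
    parser = OnPageAudit()
    parser.feed(html)
    issues = []
    if not parser.title.strip():
        issues.append("missing title tag")
    if not parser.meta_description.strip():
        issues.append("missing meta description")
    if parser.headings.count("h1") != 1:  # assumed convention: one H1 per page
        issues.append("expected exactly one H1")
    if parser.images_missing_alt:
        issues.append(f"{parser.images_missing_alt} image(s) without alt text")
    return issues

print(audit_page("<html><body><h1>A</h1><h1>B</h1><img src='a.png'></body></html>"))
```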

Technical signals matter here as well. A mobile‑friendly design, HTTPS security, and clean canonical tags reduce noise and help the index represent your site correctly.

Structured data with schema markup can highlight products, reviews, FAQs, and more in a format the engine understands at a glance.
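
Schema markup is usually added as a JSON-LD script tag. As a minimal sketch, the helper below builds schema.org Article markup. The headline, author, date, and URL are placeholder values, and which properties a search engine actually uses for rich results is documented by each engine, so check its guidelines before relying on this.

```python
import json

def article_jsonld(headline, author_name, date_published, url):
    """Build schema.org Article markup as a JSON-LD <script> tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,
        "mainEntityOfPage": url,
    }
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'

tag = article_jsonld(
    "Search engine basics",
    "Jane Doe",  # placeholder author
    "2025-01-15",
    "https://example.com/blog/seo-basics",
)
print(tag)
```

The resulting tag goes in the page’s HTML, typically inside the head.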

When you find thin pages with little value, either improve them or fold them into stronger related content so they do not weigh down the index profile.

Ranking optimization to compete for top positions

Ranking optimization pulls together keyword strategy, content quality, authority building, and user experience. This is where SEO marketing basics and more advanced tactics meet.

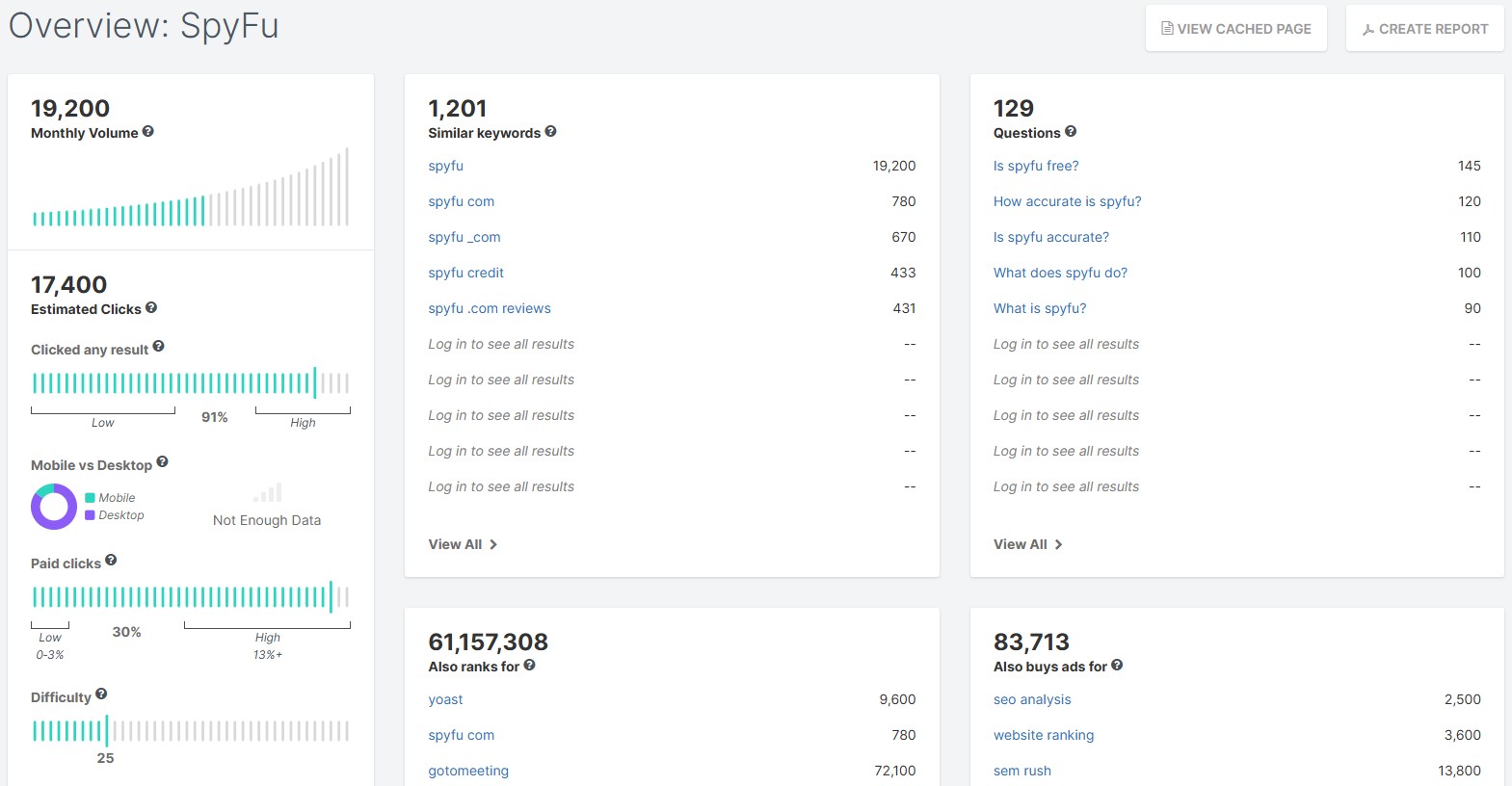

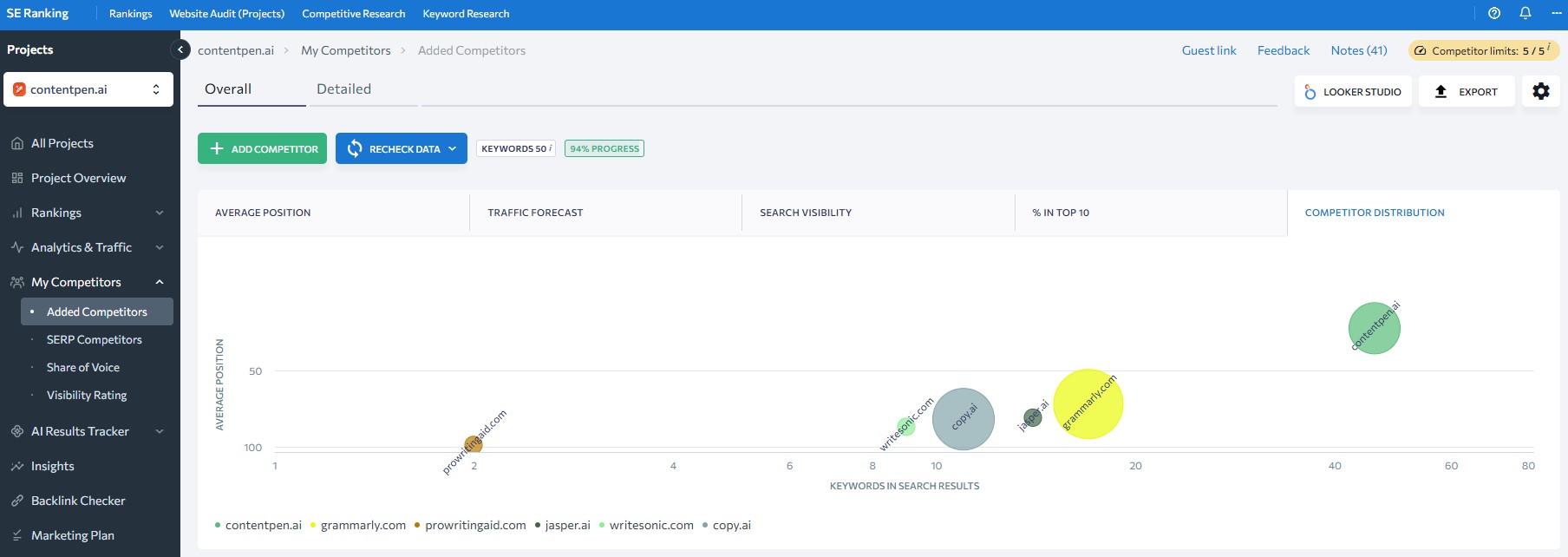

- Keyword targeting – Good research helps you find phrases with real search volume and clear intent that fit your business. Long‑tail keywords often offer a balance of focused intent and realistic competition, especially for newer sites.

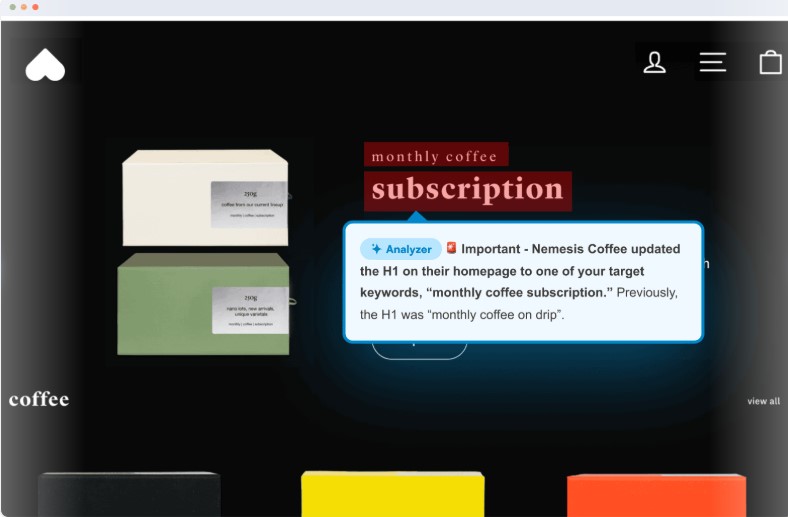

- Better content than current winners – Better content does not always mean longer content, but it does mean articles that are clearer, more helpful, and more up to date. You can add original data, expert commentary, step-by-step walkthroughs, or visuals that make hard ideas simple.

- Authority building through backlinks – Earning links from strong, relevant sites through guest posts, partnerships, digital PR, and genuinely useful assets builds trust with algorithms. Avoid schemes that trade or buy links in unnatural ways.

- User experience – Fast load times, smooth scrolling, stable layout, and readable typography all help visitors stay longer and engage more. Clear calls to action and helpful internal links keep people exploring instead of bouncing.

For local businesses, claiming and optimizing a Google Business Profile, building consistent local citations, and gathering reviews play important roles in how well you show up in map packs.

Scale your SEO with Contentpen

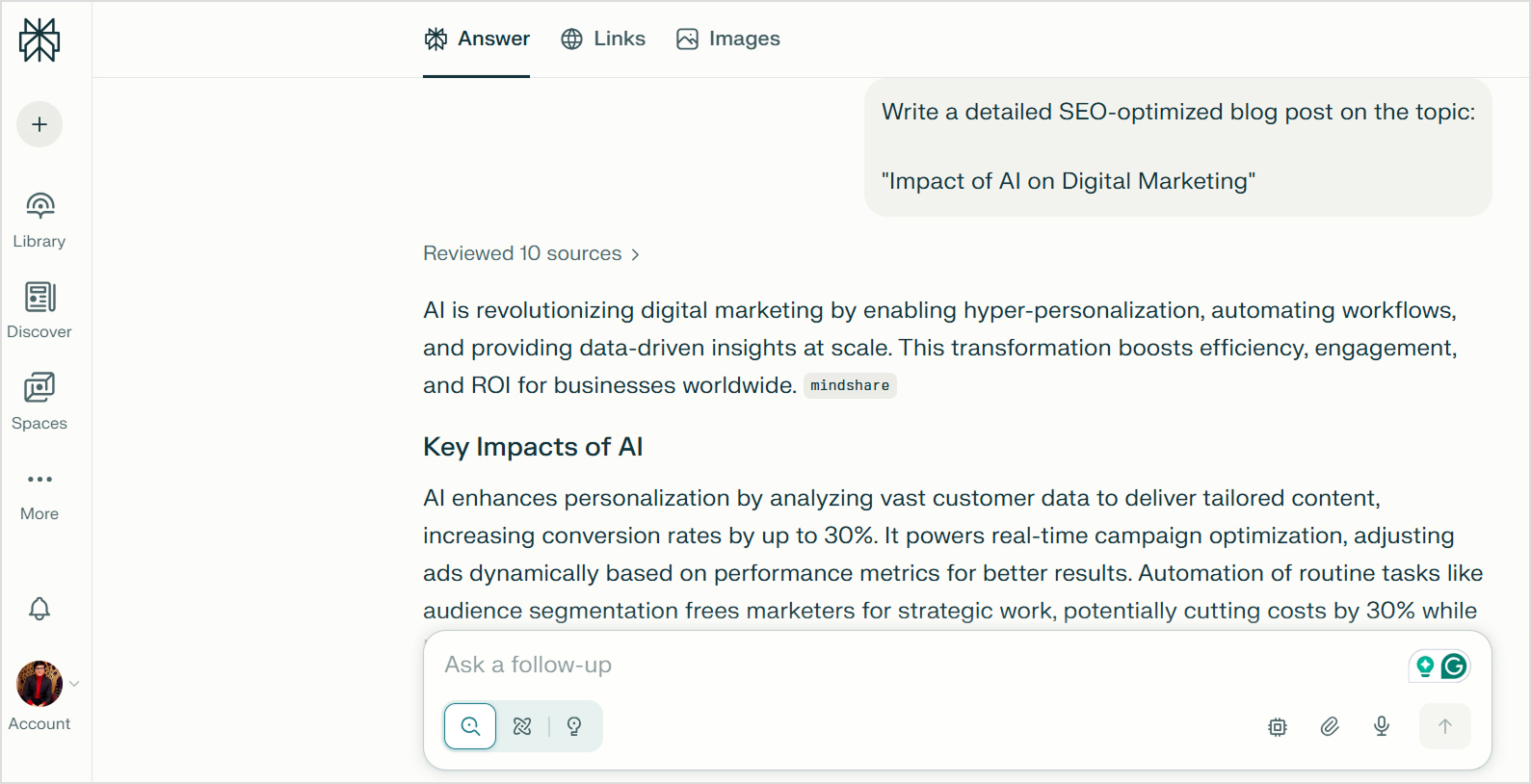

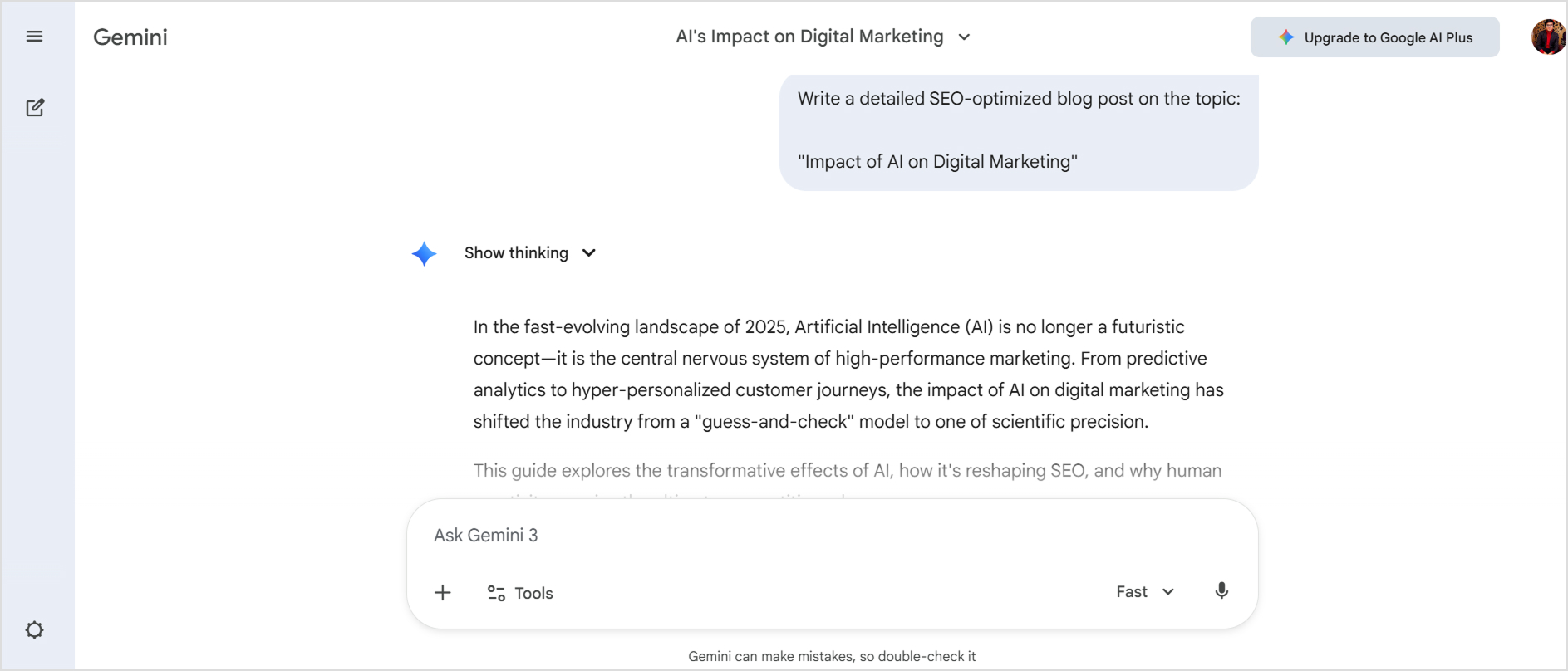

Optimizing content for search engines is a tough ask, especially if you have a lot of pages to manage. Researching topics, drafting or refreshing content, and choosing and implementing the right keywords can quickly become a mess.

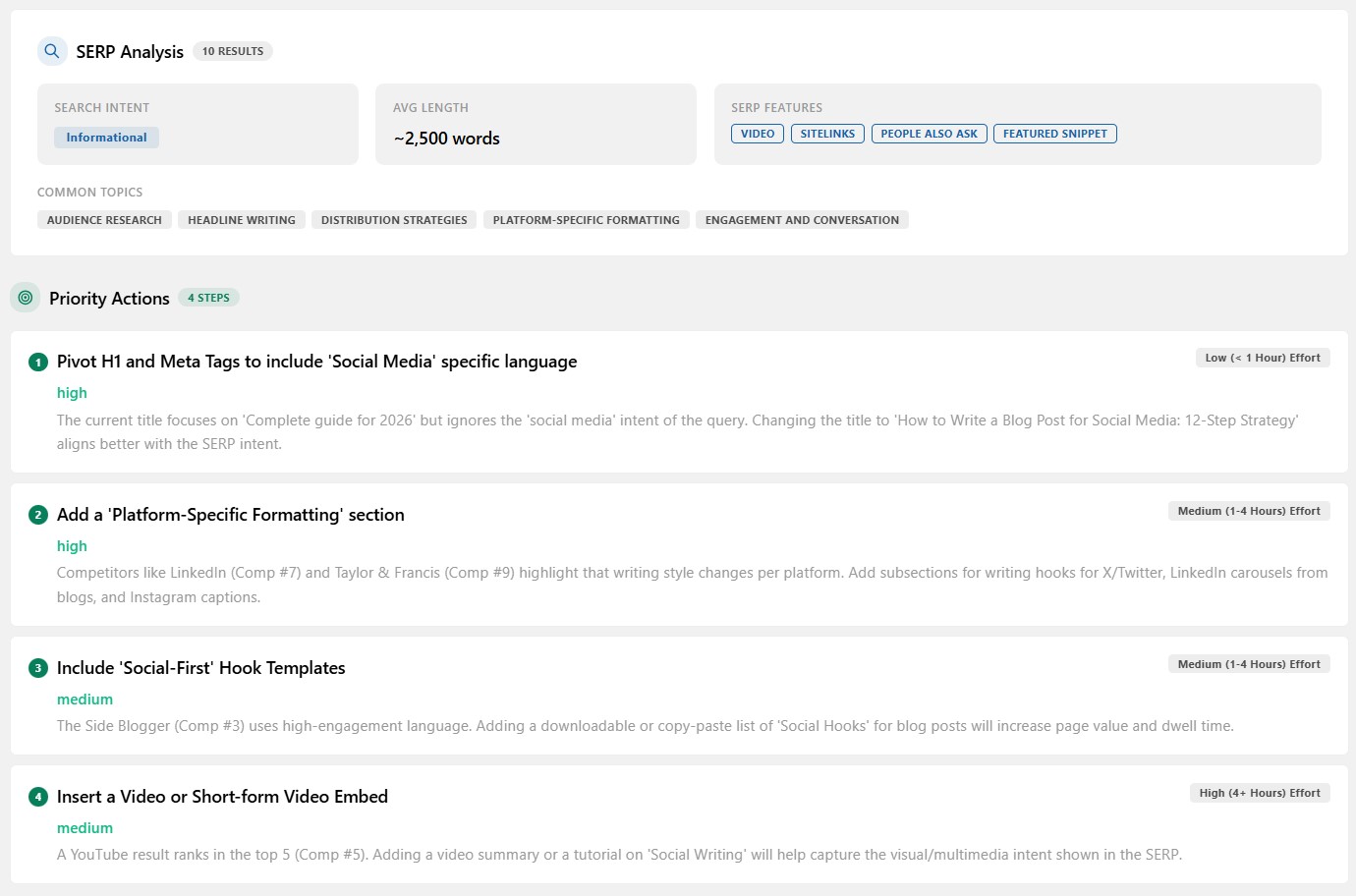

This is where a platform like Contentpen comes in. Our AI blog writing tool is built to help teams ship SEO‑focused content faster without losing quality. It can draft well‑structured articles that already follow search engine basics, suggest on‑page improvements as you work, and keep formatting consistent across your site.

Inside Contentpen, integrated SEO scoring highlights strengths and gaps in each draft. You can see whether titles use the right terms and whether headings fully cover the topic. Competitor insights show which topics and keywords others already win, so you can spot gaps and decide where a new article has a real chance to rank.

Once a piece is ready, one‑click publishing shortens the time between idea and live page, which helps Google crawl and index your work sooner.

For teams handling many clients or brands, this mix of AI‑powered drafting, real‑time SEO guidance, and fast publishing makes it much easier to maintain content velocity while sticking to core SEO fundamentals.

Final thoughts

Search engines may run on vast data centers and complex math, but the path from your page to a search result follows a simple three‑step process.

First, crawlers need to find and fetch your content. Then, indexing systems need to understand it and store it. Finally, ranking algorithms judge how well your page answers a searcher’s query and decide where it appears in the results.

When you understand search engine basics, SEO no longer feels like a mystery. It becomes a checklist you can apply to every new page to steadily build organic traffic.

Modern tools such as Contentpen can help your SEO and GEO efforts by planning, writing, and publishing SEO‑ready content at scale. The strategic thinking still comes from you, but the heavy lifting gets lighter.

Frequently asked questions

How long does it take for a new page to appear in search results?

New pages can start appearing in search results within a few days, but it can also take several weeks. The speed depends on factors such as how often your site is crawled, how strong your domain is, and whether you submit the page via tools like Google Search Console.

Can I control how often search engines crawl my site?

You cannot set an exact crawl schedule, but you can influence how often bots visit. Fast, stable servers and fresh content published on a steady schedule encourage more frequent crawls. Clean sitemaps and strong internal links also help crawlers move through your site efficiently.

How can I check whether my page is indexed?

One quick method is to search Google using the site: operator, such as typing site:example.com plus a keyword related to your page. This shows pages from your domain that Google currently indexes.

Why do my rankings change from day to day?

Small ranking shifts from day to day are normal and happen to almost every site. Personalization can also change what you see compared to what others see. Competitor updates and new content entering the index add further movement.

Do social media signals affect rankings?

Most evidence suggests that social metrics such as likes and shares are not direct ranking factors in Google’s core algorithm. However, social activity can still help SEO indirectly. When content spreads widely, more people discover it, and some of them may link to it from their own sites, which gives your pages an organic boost.

What is the single most important ranking factor?

No algorithm relies on a single signal, and search engines have repeatedly said that rankings are based on a mix of different factors. As a starting point, write high-quality content that closely matches search intent, then support it with strong, relevant backlinks and a solid technical foundation.